Traffic footage taken in the Yerba Buena Island Tunnel of the San Francisco–Oakland Bay Bridge Complex showed a previously reported eight-car pile-up after a Tesla Model S with the “Full Self-Driving” software malfunctioned and abruptly stopped in the fast lane.

KRON 4 reported that the crash happened on Thanksgiving Day. Nine people were injured, including a two-year-old who was hospitalized with a bruise and an abrasion on the rear left side of his head.

The accident footage and photos were obtained by The Intercept via a California Public Records Act request.

The footage offers the first direct look at what happened, from two different perspectives, and confirms witness accounts that the Tesla, traveling at high speed with the flow of traffic, moved into the far-left lane and then stopped for no reason.

Hours before the accident that same day, Tesla CEO Elon Musk congratulated employees on a “major milestone” after announcing that Tesla’s “Full Self-Driving” capability had been made available in North America.

According to the Austin, Texas-based automotive and clean energy company, the feature became available to more than 285,000 Tesla owners in North America.

According to a report, the driver of the Tesla Model S told the California Highway Patrol that the vehicle was in “Full Self-Driving” mode while traveling at 55 miles per hour.

The driver claimed the vehicle unexpectedly switched into the far-left lane on its own and slowed to 20 miles per hour, causing the pile-up.

A June 15, 2022, article in The Washington Post reported 273 crashes involving Tesla vehicles using the company’s Autopilot software over roughly the previous year.

The numbers published by the National Highway Traffic Safety Administration (NHTSA) at the time showed:

"Tesla vehicles made up nearly 70 percent of the 392 crashes involving advanced driver-assistance systems reported since last July, and a majority of the fatalities and serious injuries—some of which date back further than a year."

The Tesla Autopilot feature allows drivers to cede physical control of their electric vehicles, but they must be actively paying attention.

When Autopilot is engaged, the vehicles are supposed to maintain a safe distance, stay within their lanes, and change lanes while sharing the highway with other vehicles.

The “Full Self-Driving” beta is an expanded version of the Autopilot software.

It allows the vehicles to navigate city and residential streets and stop at stop signs.

Transportation safety experts have scrutinized Tesla over concerns about Autopilot’s safety, because its AI is being tested and trained on public roads alongside other drivers.

Complaints of “unexpected brake activation,” or what drivers call “Phantom Braking,” have increased in recent months. In these incidents, Tesla vehicles slam on the brakes on their own at high speeds.

The Washington Post reported that roughly 100 such complaints had been filed over a three-month period.

Since 2016, NHTSA has investigated 35 crashes in which Tesla’s “Full Self-Driving” or “Autopilot” systems were likely in use.

In total, 19 people died in those crashes.

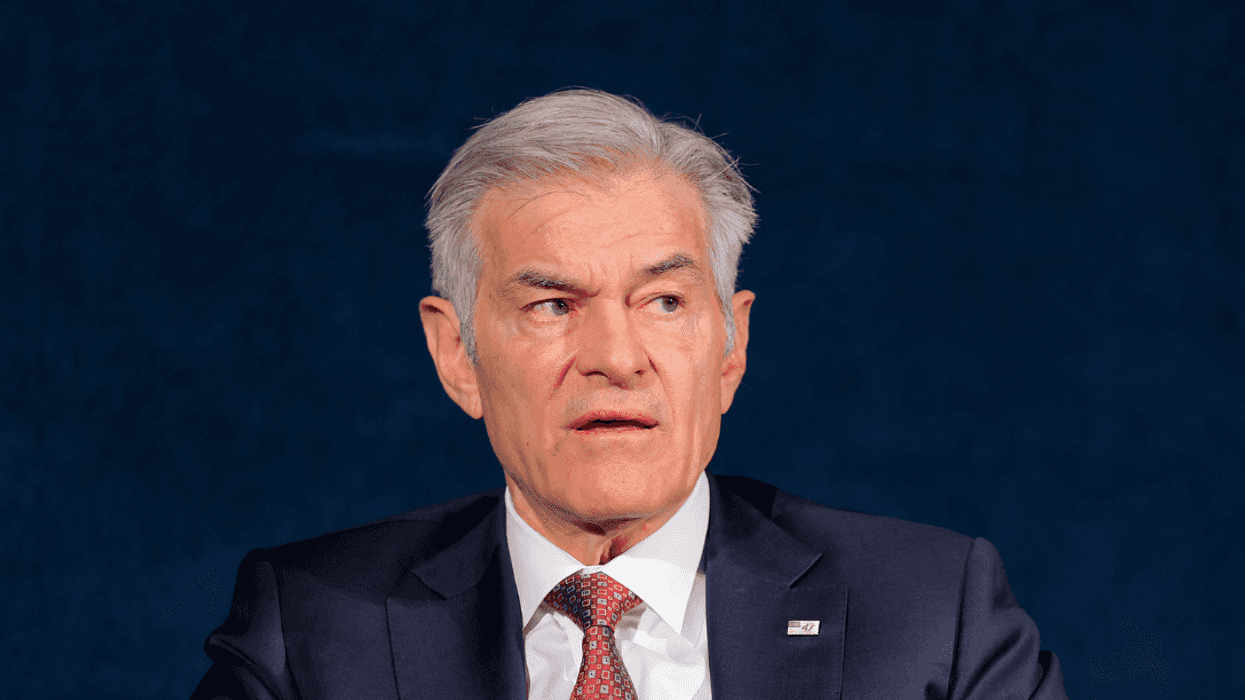

NHTSA’s administrator, Steven Cliff, expressed caution about the feature.

Cliff said:

“These technologies hold great promise to improve safety, but we need to understand how these vehicles are performing in real-world situations.”

As more carmakers add electric vehicles to their lineups, Musk has sought to make Tesla more “compelling” to stay ahead of the tightening competition.

Last June, he declared that focusing on “Full Self-Driving” technology was “essential,” adding:

“It’s really the difference between Tesla being worth a lot of money or worth basically zero.”

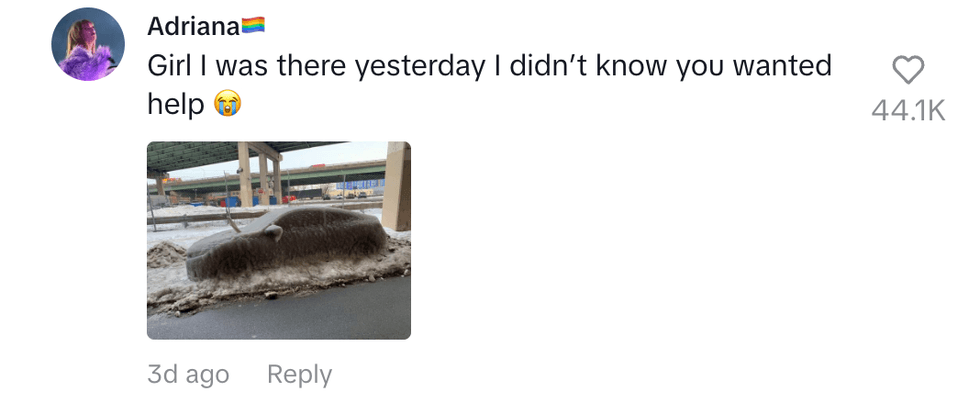

@adriana.kms/TikTok

@mossmouse/TikTok

@im.key05/TikTok

@biontrtwff101/TikTok

@likebrifr/TikTok

@itsashrashel/TikTok

@ur_not_natalie/TikTok

@rbaileyrobertson/TikTok

@xo.promisenat20/TikTok

@weelittlelandonorris/TikTok

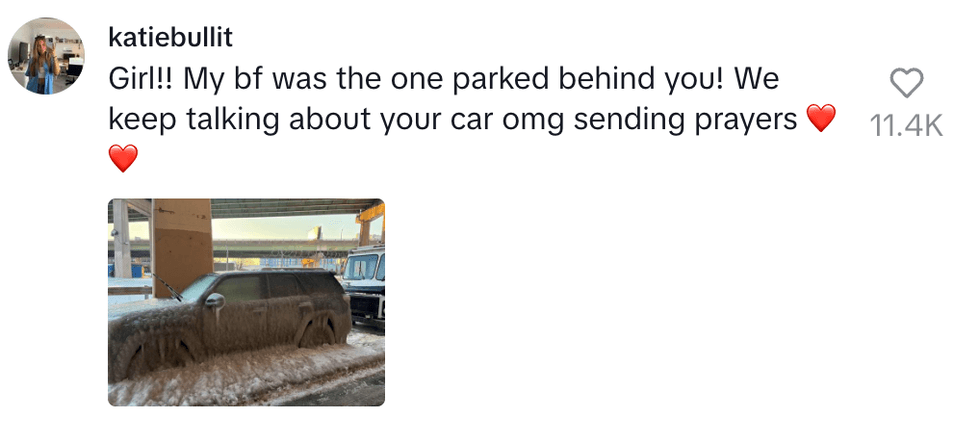

@katiebullit/TikTok

@rube59815/TikTok

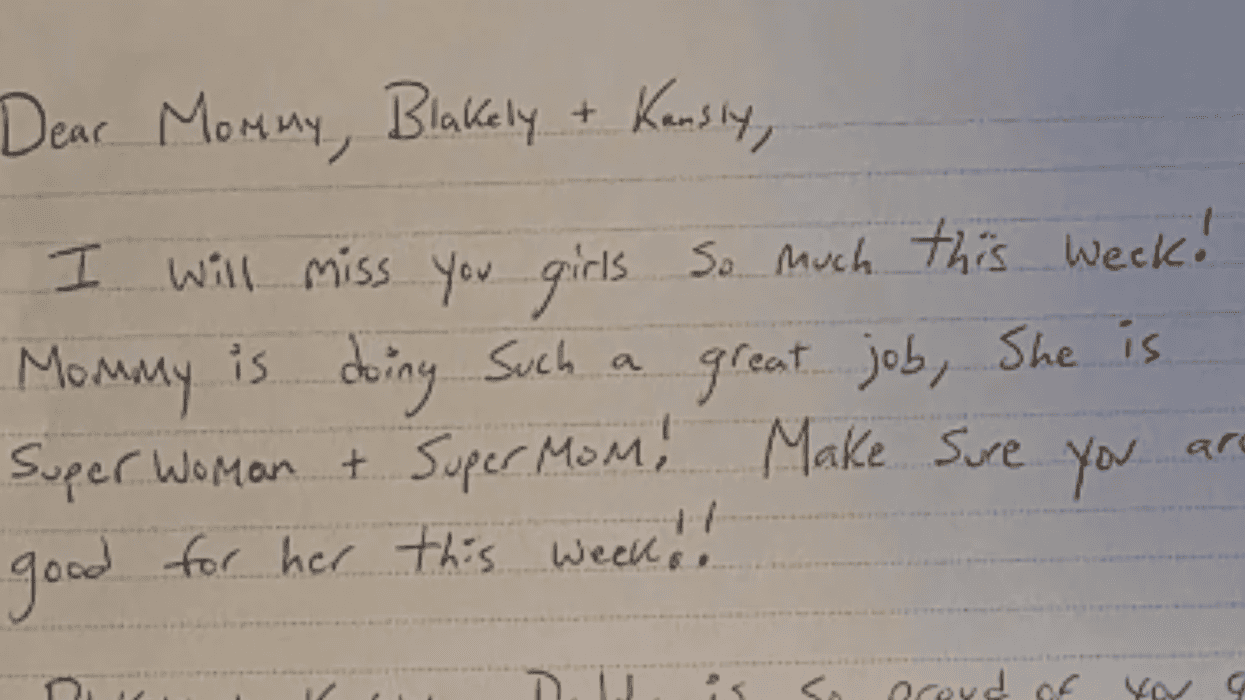

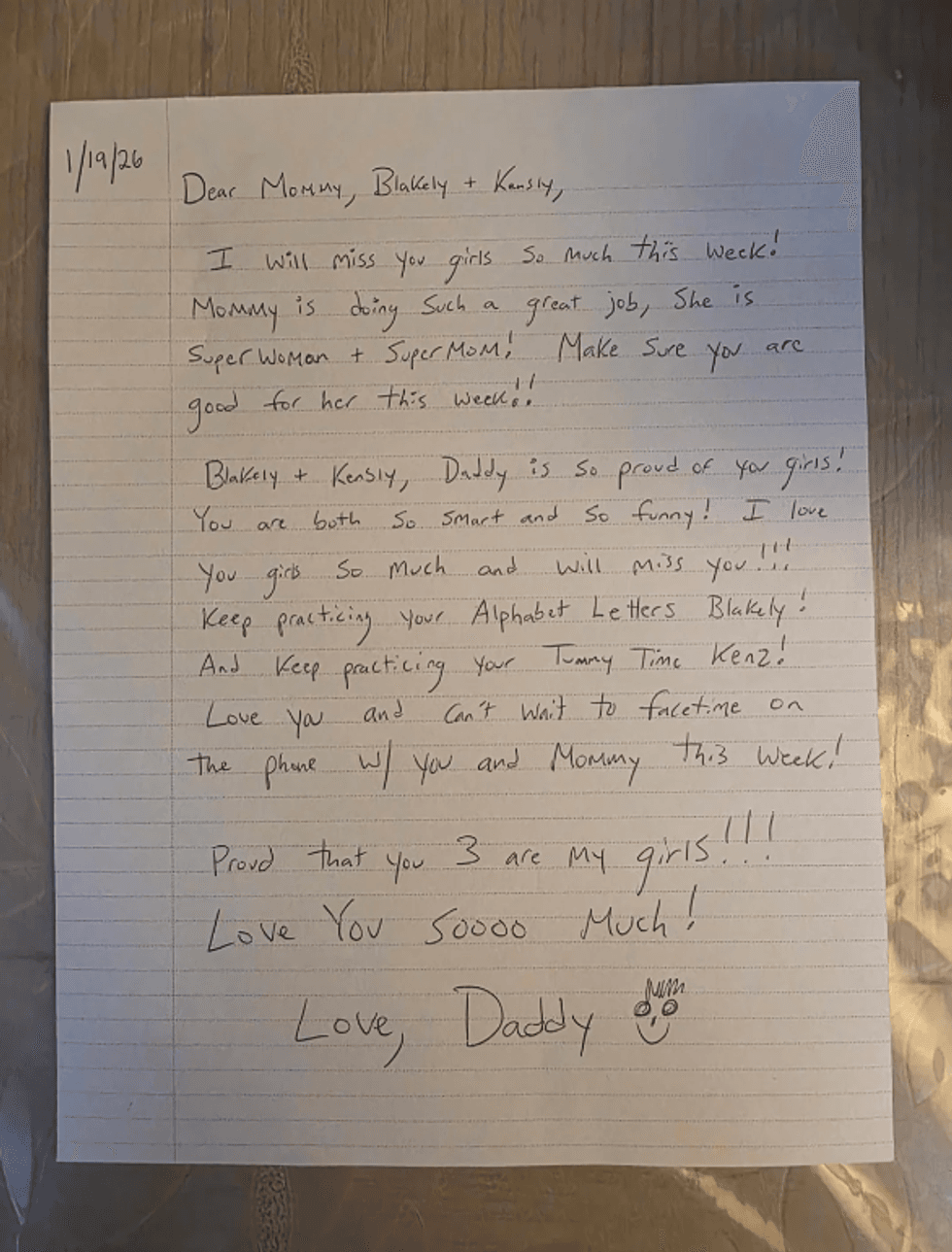

u/Fit_Bowl_7313/Reddit

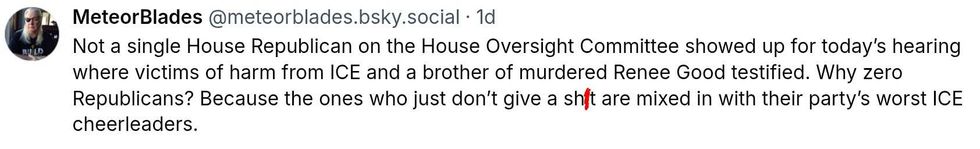

@meteorblades/Bluesky

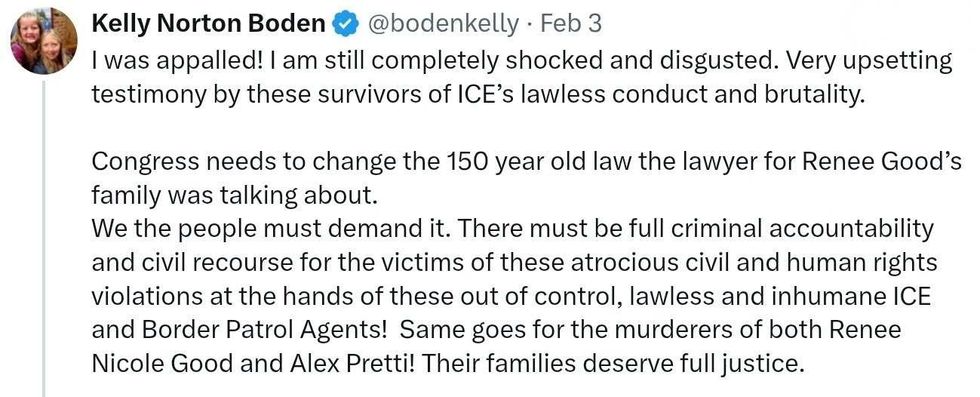

@bodenkelly/X