While designers of autonomous vehicles (AVs) continue their quest to make them safer, the realities of complex roadways call for complex ethical decisions about who lives or dies. To address the technological version of the age-old “trolley problem,” a worldwide study asked questions such as: if one or more pedestrians suddenly cross the road, should the AV be programmed to swerve and risk going off the road with its passengers, or to hit the people head-on?

In the abstract, most respondents lean toward protecting the greatest number of people, but they are also reluctant to ride in an AV that doesn’t guarantee its passengers’ protection as priority number one.

The Study’s Design

Researchers at MIT launched the initial online study in the United States in 2015—an interactive video game that allows people to make ethical choices about how AVs should respond in dangerous situations.

From 2017 to 2018, MIT expanded that reach worldwide to include 4 million people. At the Global Education and Skills Forum in Dubai on March 18, 2018, MIT Professor Iyad Rahwan told the audience that his Moral Machine study is now the largest global ethics study ever conducted. As fate would have it, that was the day before the first pedestrian fatality caused by an AV in the United States.

Moral Machine (MM) allows users to choose alternatives when risky road conditions arise, judge which is the most ethically acceptable, and even design alternative solutions from those presented.

MM’s website calls itself: “[a] platform for gathering a human perspective on moral decisions made by machine intelligence, such as self-driving cars.” The instructions explain, “We show you moral dilemmas where a driverless car must choose the lesser of two evils, such as killing two passengers or five pedestrians. As an outside observer, you judge which outcome you think is more acceptable.”

The software creates even more nuanced scenarios, especially if participants click on the button asking to “Show Description,” rather than simply choosing based on the depicted image. The people in each image are described in greater detail: by gender, age, and with characteristics including “homeless” and “executives.” Live animals in the road, such as dogs and cats, are also presented in the situations. People can compare their answers with those of others and discuss them in a comments section.

Results of the Studies

When Rahwan previewed the global studies’ results, which will be published later this year, they largely mirrored the results of the US study: in theory, people wanted autonomous vehicles designed for the greater good, sacrificing the lives of passengers to save the lives of more pedestrians. But they didn’t want to ride in those cars.

When explaining the original US study results, Rahwan, a co-author of the study, said, “Most people want to live in a world where cars will minimize casualties.” However, he added: “But everybody wants their own car to protect them at all costs.”

Indeed, 76 percent of respondents found it more ethical for an AV to sacrifice one passenger than to kill 10 pedestrians. But that rating fell by one-third when respondents considered the possibility of riding in such a car. In fact, the global study found that nearly 40 percent of participants chose to have their own cars run over pedestrians rather than injure passengers.

The aptly named paper, “The social dilemma of autonomous vehicles,” was published by the journal Science:

“We found that participants…approved of utilitarian AVs (that is, AVs that sacrifice their passengers for the greater good) and would like others to buy them, but they would themselves prefer to ride in AVs that protect their passengers at all costs. The study participants disapprove of utilitarian regulations for AVs and would be less willing to buy such an AV.”

“I think the authors are definitely correct to describe this as a social dilemma,” said Joshua Greene, professor of psychology at Harvard University. Greene wrote a commentary on the research for Science and noted, “The critical feature of a social dilemma is a tension between self-interest and collective interest.” He said the researchers “clearly show that people have a deep ambivalence about this question.”

The study’s more detailed questions showed clear preferences to protect certain groups and more division in the results as situations grew increasingly complex. Respondents generally chose to spare children’s lives over those of adults. Furthermore, the older the subject, the more willing respondents were to risk that person’s safety. With multi-faceted situations, such as one pedestrian crossing legally while multiple people crossed illegally, results were evenly split.

Researchers also noted some intriguing cultural differences between Eastern and Western countries in the global study, with Germany standing out as something of an outlier. Generally, the preliminary results from the global study indicate that people in Western countries, including the US and Europe, favor the utilitarian ideal of minimizing overall harm, according to Rahwan. However, respondents from Germany went against the grain of their neighbors.

“When we compared Germany to the rest of the world, or the east to the west, we found very interesting cultural differences,” said Rahwan. Presumably, these specific differences will be detailed in the study to be published later this year.

In line with Rahwan’s findings, Christoph von Hugo, an executive at German automaker Mercedes-Benz, sparked a controversy in early October 2016 by stating that the company will prioritize the lives of its AV passengers, even at the expense of pedestrians.

“If you know you can save at least one person, at least save that one,” von Hugo said at the Paris Motor Show. “Save the one in the car. If all you know for sure is that one death can be prevented, then that’s your first priority.”

A few weeks later, von Hugo claimed he was misquoted, attempting to clarify the company’s official position that “neither programmers nor automated systems are entitled to weigh the value of human lives.” He added that the company has no legal authority to favor one life over another in Germany or any other country.

Moving Forward with Ethics in AVs

While society comes to terms with the idea that AVs will unavoidably kill people (though hopefully less often than vehicles driven by humans), some important decisions need to be made about the ethical judgments those AVs will make.

Rahwan explained: "The problem with the new system [sic] it has a very distinctive feature: algorithms are making decisions that have very important consequences on human life."

The goal of the study, however, is not only to decide what ethical guidelines should drive AVs’ algorithms, but also to learn what society will require before it is willing to accept the risks and use them.

The researchers acknowledge in the 2016 US study that public-opinion polling on this issue is at a very early stage, and that current findings “are not guaranteed to persist” if the landscape of driverless cars evolves. However, the early findings of the worldwide study two years later show similar results.

But NuTonomy CEO Karl Iagnemma, whose Cambridge-based company has piloted self-driving taxis in Singapore, questions the value of the detailed study, emphasizing that AV technology is nowhere near ready to distinguish between many of the details tested in the global study.

"When a driverless car looks out on the world, it's not able to distinguish the age of a pedestrian or the number of occupants in a car." Iagnemma added, "Even if we wanted to imbue an autonomous vehicle with an ethical engine, we don't have the technical capability today to do so."

Likewise, Noah Goodall, a scientist at the Virginia Transportation Research Council, said the intense focus on the rare and dramatic “trolley problem” consumes too much energy. He believes the time would be better spent on typical day-to-day algorithmic issues that still need addressing, including how much space to leave between cars, pedestrians and cyclists.

"All these cars do risk management. It just doesn't look like a trolley problem," Goodall said.

But Rahwan explained, “I think it was important to not just have a theoretical discussion of this, but to actually have an empirically informed discussion.”

With this research, he’s trying to prevent a situation where AVs are designed in such a way that people refuse to buy them, and the life-saving industry collapses as a result.

"There is a real risk that if we don't understand those psychological barriers and address them through regulation and public outreach, we may undermine the entire enterprise," Rahwan explained. "People will say they're not comfortable with this. It would stifle what I think will be a very good thing for humanity."

Even if people grew more comfortable with the technology, and it improved over time, the study clearly demonstrates that “defining the algorithms that will help AVs make these moral decisions is a formidable challenge.”

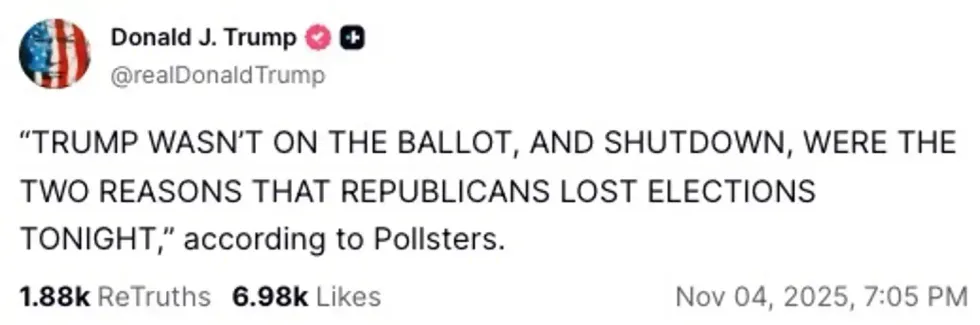

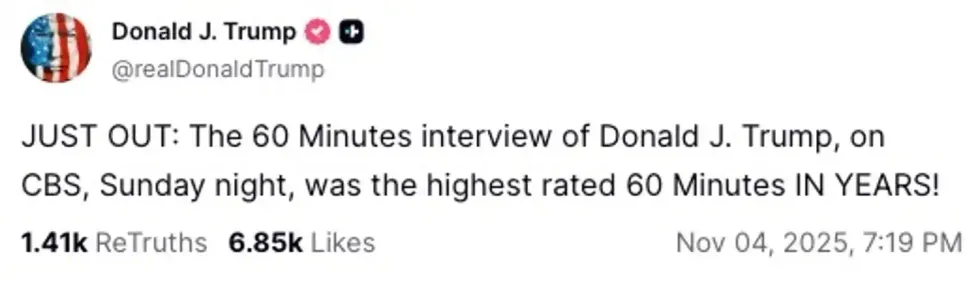

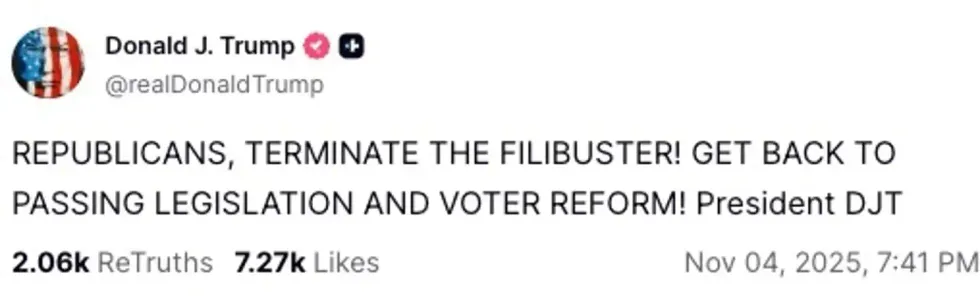

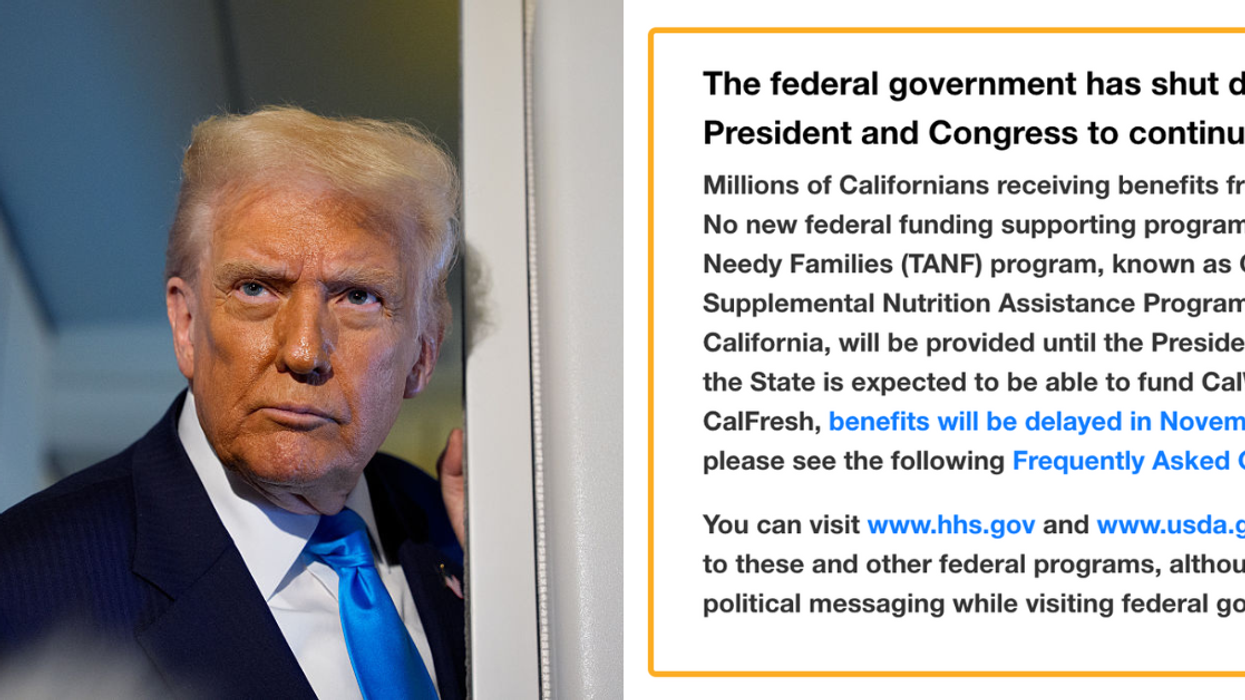

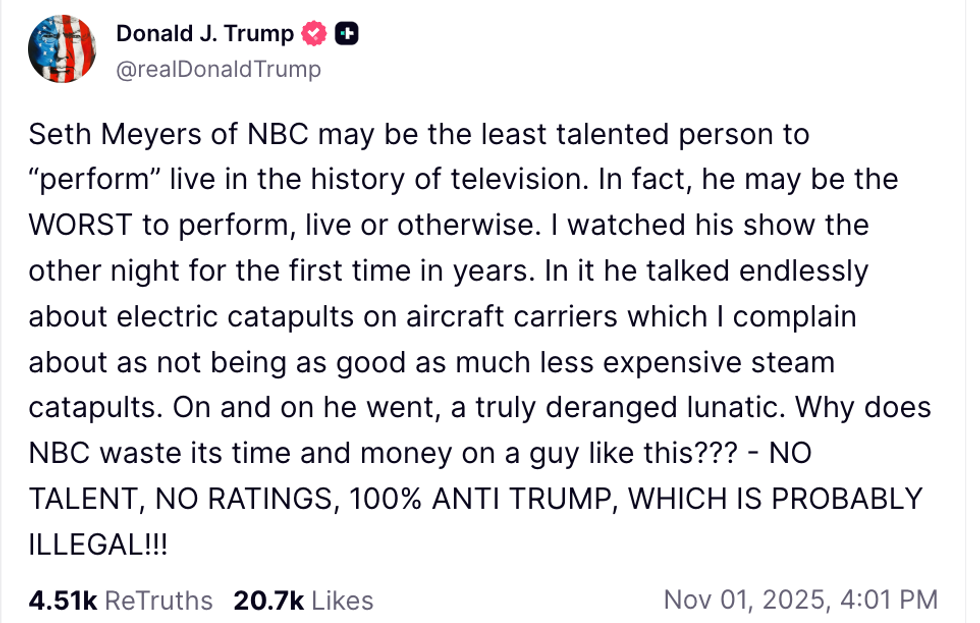

@realDonaldTrump/Truth Social

@realDonaldTrump/Truth Social @realDonaldTrump/Truth Social

@realDonaldTrump/Truth Social @realDonaldTrump/Truth Social

@realDonaldTrump/Truth Social @realDonaldTrump/Truth Social

@realDonaldTrump/Truth Social

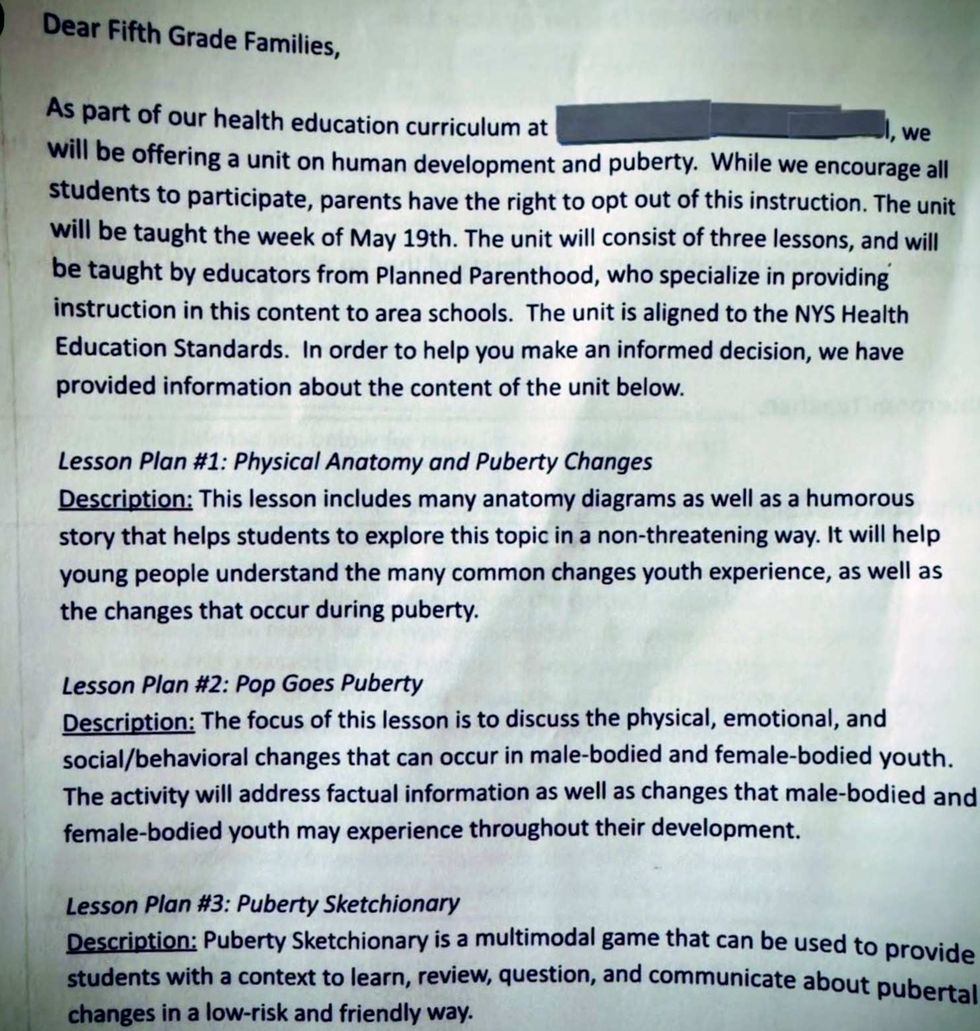

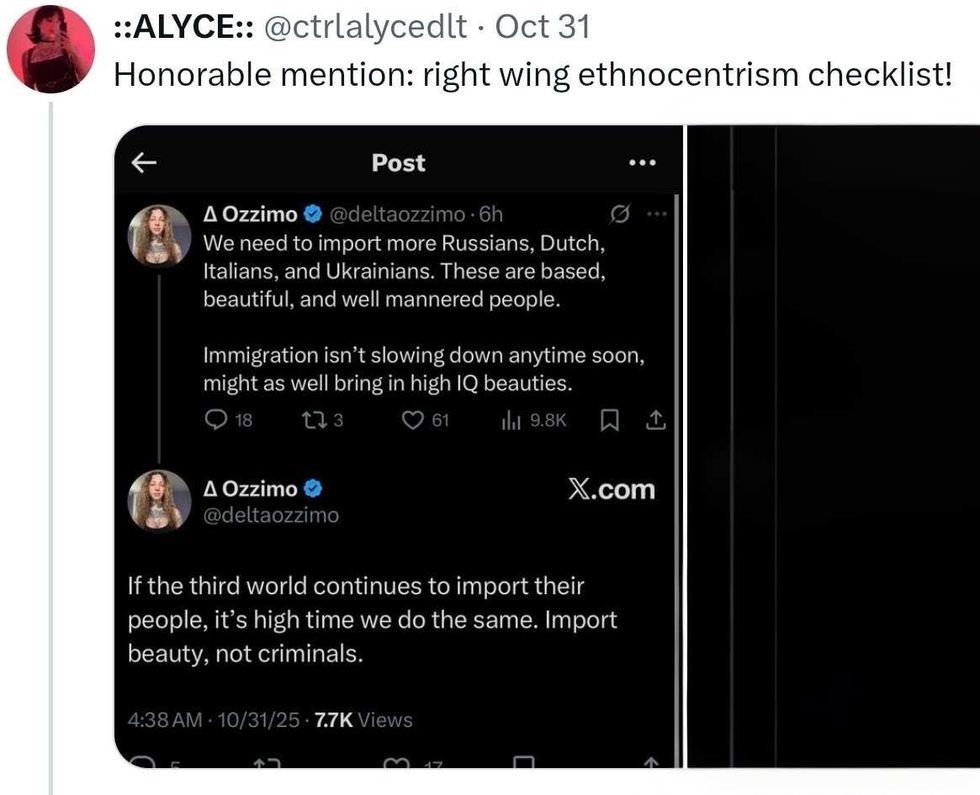

@deltaozzimo/X

@deltaozzimo/X @deltaozzimo/Instagram

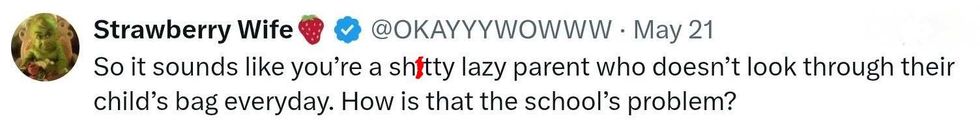

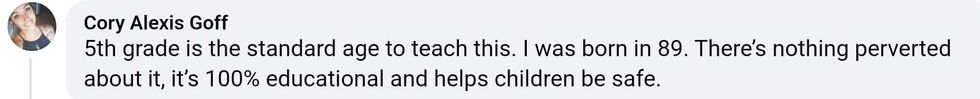

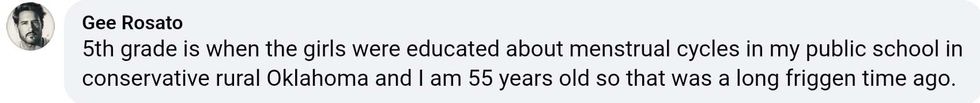

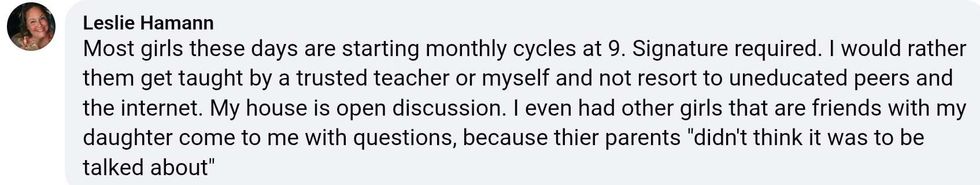

@deltaozzimo/Instagram Sh*tposting 101/Facebook

Sh*tposting 101/Facebook @deltaozzimo/X

@deltaozzimo/X Sh*tposting 101/Facebook

Sh*tposting 101/Facebook Sh*tposting 101/Facebook

Sh*tposting 101/Facebook Sh*tposting 101/Facebook

Sh*tposting 101/Facebook @deltaozzimo/X

@deltaozzimo/X

@realDonaldTrump/Truth Social

@realDonaldTrump/Truth Social

@rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok

@rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok @rootednjoyy/TikTok

@rootednjoyy/TikTok