If you've been scrolling through Instagram lately, you may have noticed many of your friends posting digital avatar versions of their selfies, thanks to a popular artificial intelligence app.

The social media trend involves users downloading the Lensa app and paying $7.99 (up from $3.99 just days ago) to access its "Magic Avatars" feature, which turns selfie images into digital art with themes ranging from pop to princesses to anime.

But the wildly popular app, created by photo-editing tech company Prisma Labs, has drawn backlash from critics who believe it may violate users' data privacy and artists' rights.

Some users have become concerned about similar photo-altering apps and how they retain users' data once photos are submitted.

According to Prisma’s terms and conditions, users:

“retain all rights in and to your user content.”

Even so, anyone using the app is essentially consenting to give the company broad rights to use the digital artwork created through it.

Prisma's terms further state that users grant the company a:

“Perpetual, revocable, nonexclusive, royalty-free, worldwide, fully-paid, transferable, sub-licensable license to use, reproduce, modify, adapt, translate, create derivative works.”

Lensa's privacy policy, which is separate from its terms and conditions, states that the company:

“Does not use photos you provide….for any reason other than to apply different stylized filters or effects to them.”

Critics were concerned that the company deletes generated AI images from its cloud services, but only after using them to train its AI.

The viral app uses Stable Diffusion, a text-to-image model essentially "'trained' to learn patterns through an online database of images called LAION-5B," according to BuzzFeed News.

"Once the training is complete, it no longer pulls from those images but uses the patterns to create more content. It then 'learns' your facial features from the photos you upload and applies them to that art."

For many artists, the source of that knowledge is the point of contention: LAION-5B draws on publicly available images from sites like Google Images, DeviantArt, Getty Images and Pinterest, without compensating the people who made them.

An anonymous digital artist told the media, "This is about theft."

"Artists dislike AI art because the programs are trained unethically using databases of art belonging to artists who have not given their consent."

Many Twitter users voiced similar concerns.

Another problem is the AI's tendency to create images that sexualize women and images that are racially inaccurate, essentially anglicizing people of color.

The CEO of Prisma Labs emphasized that the AI:

“does not have the same level of attention and appreciation for art as a human being.”

Here are some examples of how similar apps can perpetuate stereotypes and cause harm in the process.

Cybersecurity expert Andrew Couts, a senior security editor at Wired who oversees privacy policy, national security and surveillance coverage, told Good Morning America it's impossible to know what happens to the images uploaded to the app.

"It's impossible to know, without a full audit of the company's back-end systems, to know how safe or unsafe your pictures may be."

"The company does claim to 'delete' face data after 24 hours and they seem to have good policies in place for their privacy and security practices."

Couts added he wasn't too worried about the photos since most people's faces are already available on their social media pages.

However, he added:

"The main thing I would be concerned about is the behavioral analytics that they're collecting."

"If I were going to use the app, I would make sure to turn on as restrictive privacy settings as possible."

He also encouraged users to tighten the privacy settings on their smartphones.

"You can change your privacy settings on your phone to make sure that the app isn't collecting as much data as it seems to be able to."

"And you can make sure that you're not sharing images that contain anything more private than just your face."

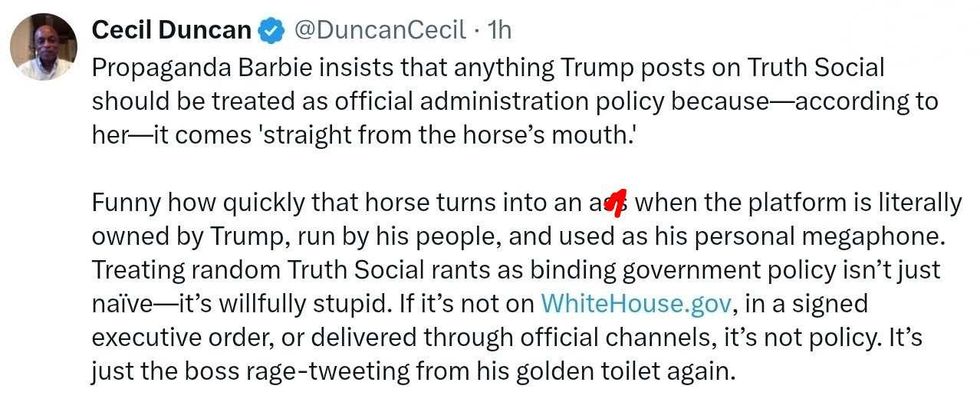

@DuncanCecil/X

@DuncanCecil/X @@realDonaldTrump/Truth Social

@@realDonaldTrump/Truth Social @89toothdoc/X

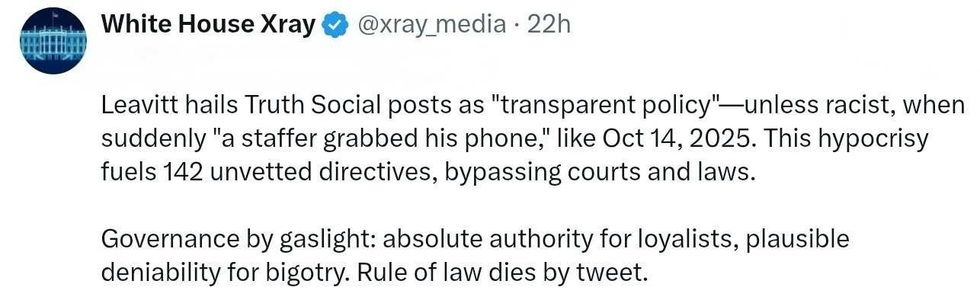

@89toothdoc/X @xray_media/X

@xray_media/X @CHRISTI12512382/X

@CHRISTI12512382/X

@sza/Instagram

@sza/Instagram @laylanelli/Instagram

@laylanelli/Instagram @itssharisma/Instagram

@itssharisma/Instagram @k8ydid99/Instagram

@k8ydid99/Instagram @8thhousepath/Instagram

@8thhousepath/Instagram @solflwers/Instagram

@solflwers/Instagram @msrosemarienyc/Instagram

@msrosemarienyc/Instagram @afropuff1/Instagram

@afropuff1/Instagram @jamelahjaye/Instagram

@jamelahjaye/Instagram @razmatazmazzz/Instagram

@razmatazmazzz/Instagram @sinead_catherine_/Instagram

@sinead_catherine_/Instagram @popscxii/Instagram

@popscxii/Instagram