An artificial intelligence expert warned that an open letter signed by Twitter CEO Elon Musk, which asked AI labs to "pause" the training of advanced AI models, understated the potential risk of human extinction.

U.S. decision theorist Eliezer Yudkowsky argued in a Time op-ed that a six-month moratorium on further AI development was not enough and that such development needed to be shut down altogether; otherwise, he feared, "everyone on Earth will die."

Yudkowsky, who leads research at the Machine Intelligence Research Institute and has been working on aligning AI since 2001, refrained from signing the open letter that called for:

“...all AI labs to immediately pause for at least 6 months the training of AI systems more powerful than GPT-4.”

The letter, published by the non-profit Future of Life Institute, which is primarily funded by Musk's grantmaking organization, the Musk Foundation, included 1,100 signatures from tech figures such as Apple co-founder Steve Wozniak and Skype co-founder Jaan Tallinn, as well as former presidential candidate Andrew Yang.

The institute says its goal is to “steer transformative technologies away from extreme, large-scale risks.”

The letter also read:

“Powerful AI systems should be developed only once we are confident that their effects will be positive and their risks will be manageable.”

“This confidence must be well justified and increase with the magnitude of a system’s potential effect.”

Yudkowsky acknowledged that he respected those who signed the letter, calling it "an improvement on the margin" and better than having no moratorium at all.

However, he suggested the letter offered very little by way of solving the problem.

"The key issue is not 'human-competitive' intelligence (as the open letter puts it)," he wrote, adding:

"It’s what happens after AI gets to smarter-than-human intelligence."

"Key thresholds there may not be obvious, we definitely can’t calculate in advance what happens when, and it currently seems imaginable that a research lab would cross critical lines without noticing."

He went on to claim:

"Many researchers steeped in these issues, including myself, expect that the most likely result of building a superhumanly smart AI, under anything remotely like the current circumstances, is that literally everyone on Earth will die."

"Not as in 'maybe possibly some remote chance,' but as in 'that is the obvious thing that would happen,'" asserted Yudkowsky.

"It’s not that you can’t, in principle, survive creating something much smarter than you; it’s that it would require precision and preparation and new scientific insights, and probably not having AI systems composed of giant inscrutable arrays of fractional numbers."

Some people who found the argument confusing shared their thoughts online, and some also slammed Musk for signing a letter demanding a pause on AI progress.

The AI expert asked readers to set aside preconceived notions of a sentient "hostile superhuman" AI that lives on the internet and sends "ill-intentioned emails."

"Visualize an entire alien civilization, thinking at millions of times human speeds, initially confined to computers—in a world of creatures that are, from its perspective, very stupid and very slow."

He maintained that such a superintelligent entity "won't stay confined to computers for long."

"In today’s world you can email DNA strings to laboratories that will produce proteins on demand, allowing an AI initially confined to the internet to build artificial life forms or bootstrap straight to postbiological molecular manufacturing."

"If somebody builds a too-powerful AI, under present conditions, I expect that every single member of the human species and all biological life on Earth dies shortly thereafter."

Yudkowsky stressed that the problem of controlling rapidly advancing AI models should have been tackled 30 years ago and cannot be solved in a six-month pause.

"It took more than 60 years between when the notion of Artificial Intelligence was first proposed and studied, and for us to reach today’s capabilities."

"Solving safety of superhuman intelligence—not perfect safety, safety in the sense of 'not killing literally everyone'—could very reasonably take at least half that long."

Achieving this, he implied, allows no room for error.

"The thing about trying this with superhuman intelligence is that if you get that wrong on the first try, you do not get to learn from your mistakes, because you are dead."

"Humanity does not learn from the mistake and dust itself off and try again, as in other challenges we’ve overcome in our history, because we are all gone."

Yudkowsky proposed that a moratorium on the development of powerful AI systems should be "indefinite and worldwide" with no exceptions, including for governments or militaries.

"Shut down all the large GPU clusters (the large computer farms where the most powerful AIs are refined). Shut down all the large training runs."

"Put a ceiling on how much computing power anyone is allowed to use in training an AI system, and move it downward over the coming years to compensate for more efficient training algorithms."

Skeptics of Yudkowsky's proposal added their two cents.

You can watch a discussion with Yudkowsky about AI and the end of humanity on the Bankless Podcast below.

159 - We’re All Gonna Die with Eliezer Yudkowsky (youtu.be)

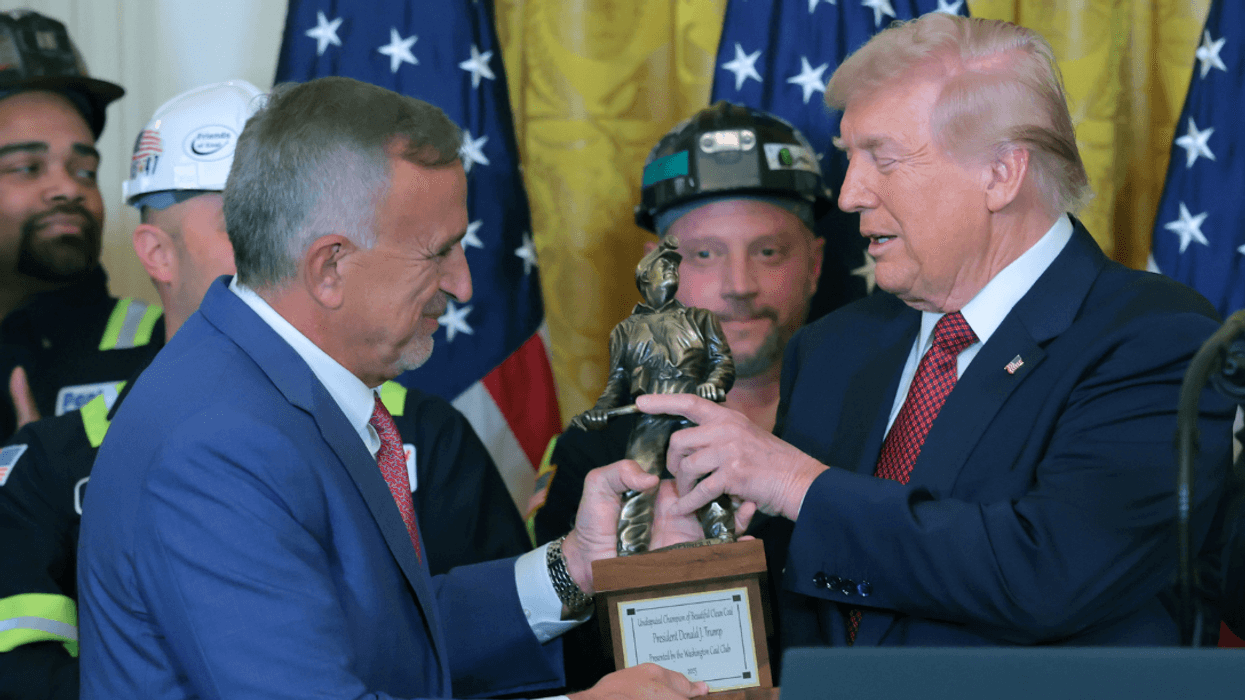

Roberto Schmidt/AFP via Getty Images


u/pizzaratsfriend/Reddit

u/pizzaratsfriend/Reddit u/Flat_Valuable650/Reddit

u/Flat_Valuable650/Reddit u/ReadyCauliflower8/Reddit

u/ReadyCauliflower8/Reddit u/RealBettyWhite69/Reddit

u/RealBettyWhite69/Reddit u/invisibleshadowalker/Reddit

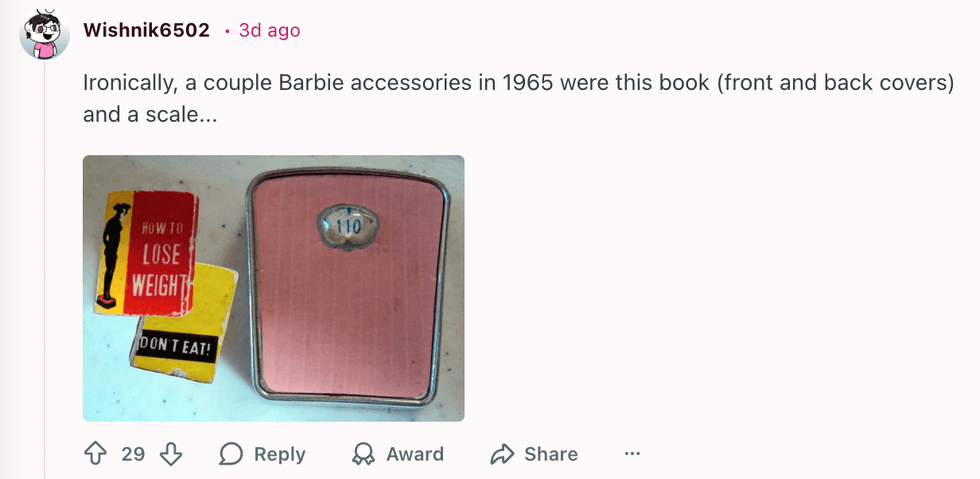

u/invisibleshadowalker/Reddit u/Wishnik6502/Reddit

u/Wishnik6502/Reddit u/kateastrophic/Reddit

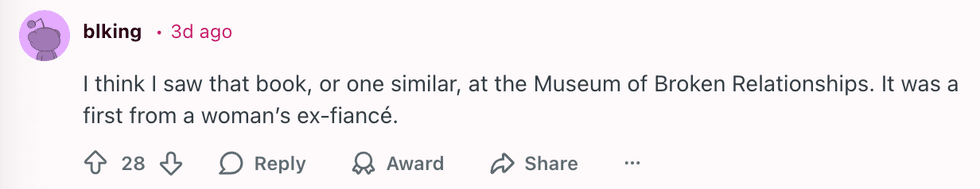

u/kateastrophic/Reddit u/blking/Reddit

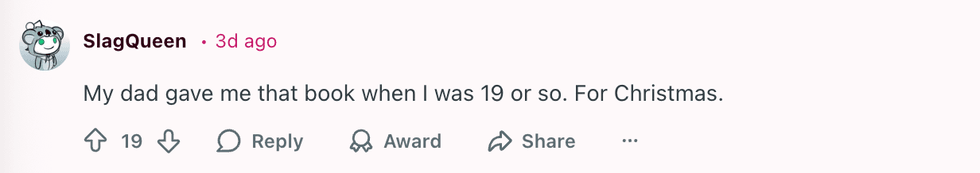

u/blking/Reddit u/SlagQueen/Reddit

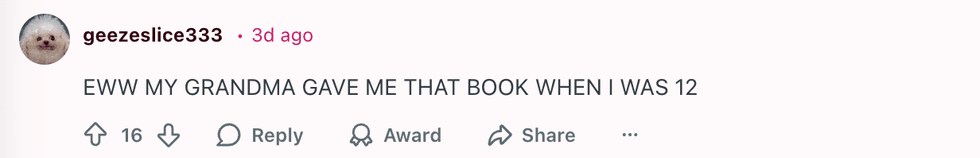

u/SlagQueen/Reddit u/geezeslice333/Reddit

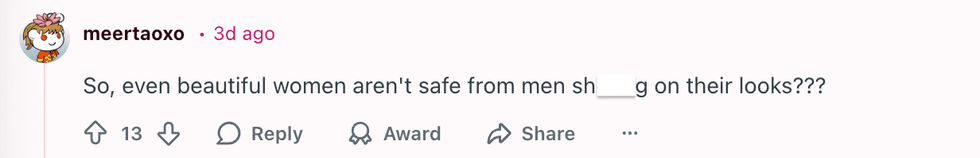

u/geezeslice333/Reddit u/meertaoxo/Reddit

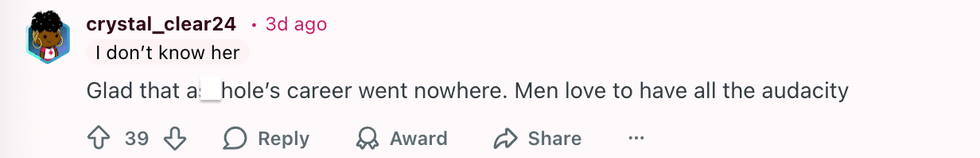

u/meertaoxo/Reddit u/crystal_clear24/Reddit

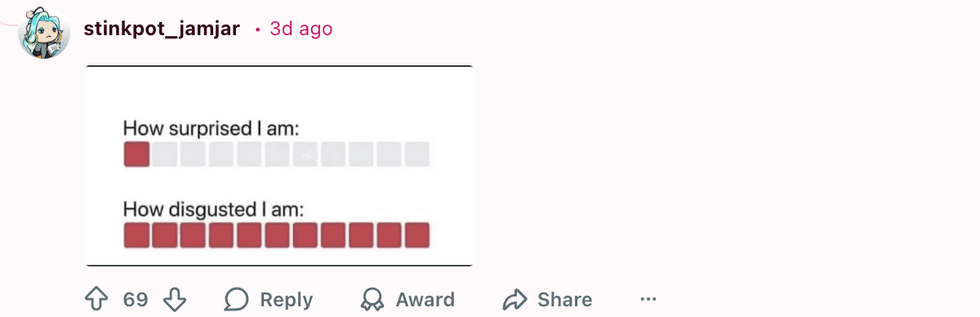

u/crystal_clear24/Reddit u/stinkpot_jamjar/Reddit

u/stinkpot_jamjar/Reddit

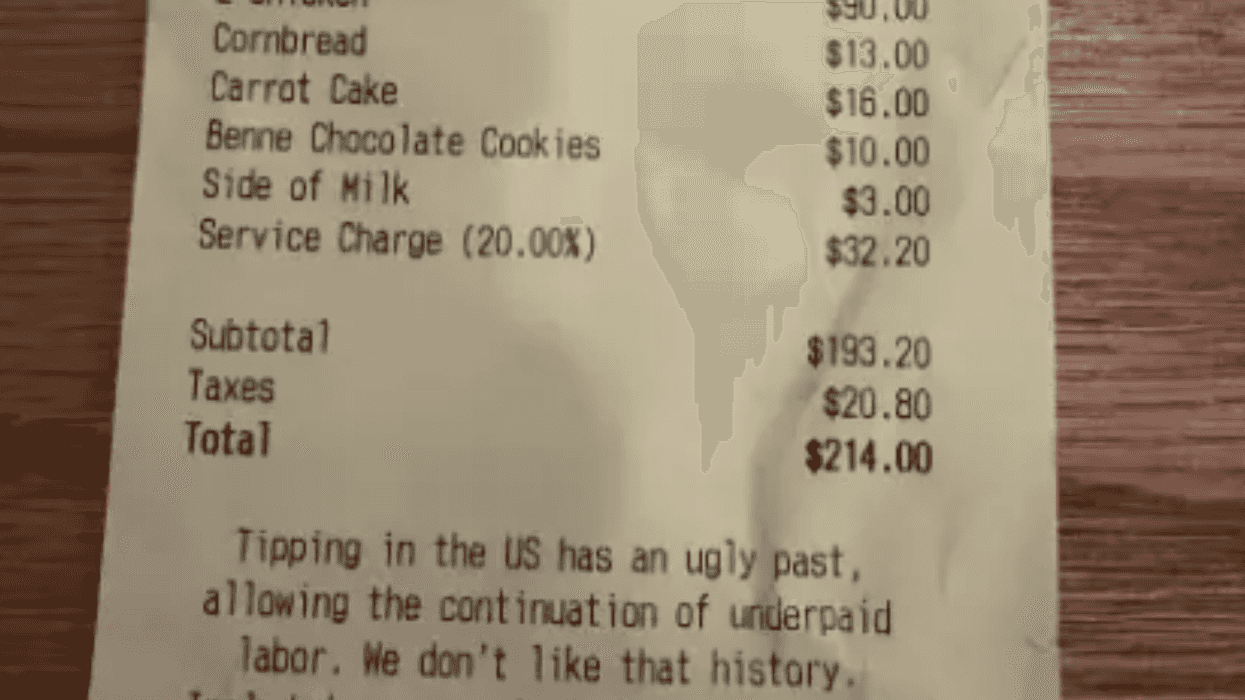

u/Bulgingpants/Reddit

u/Bulgingpants/Reddit

@hackedliving/TikTok

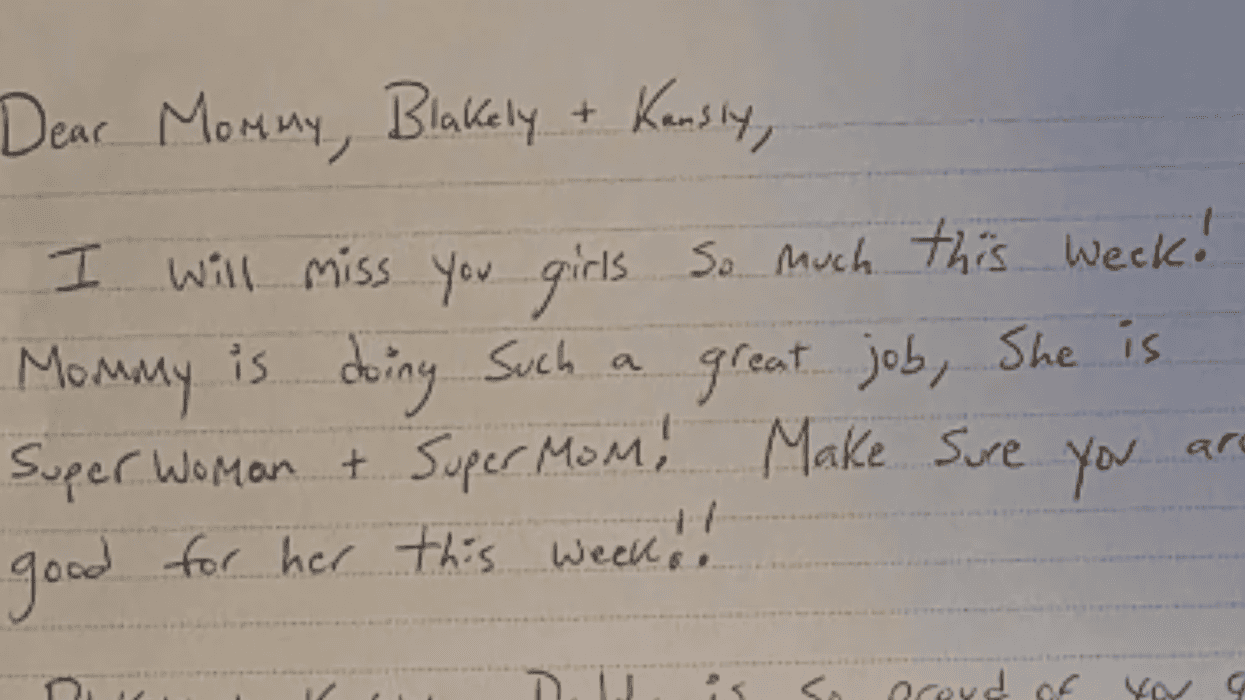

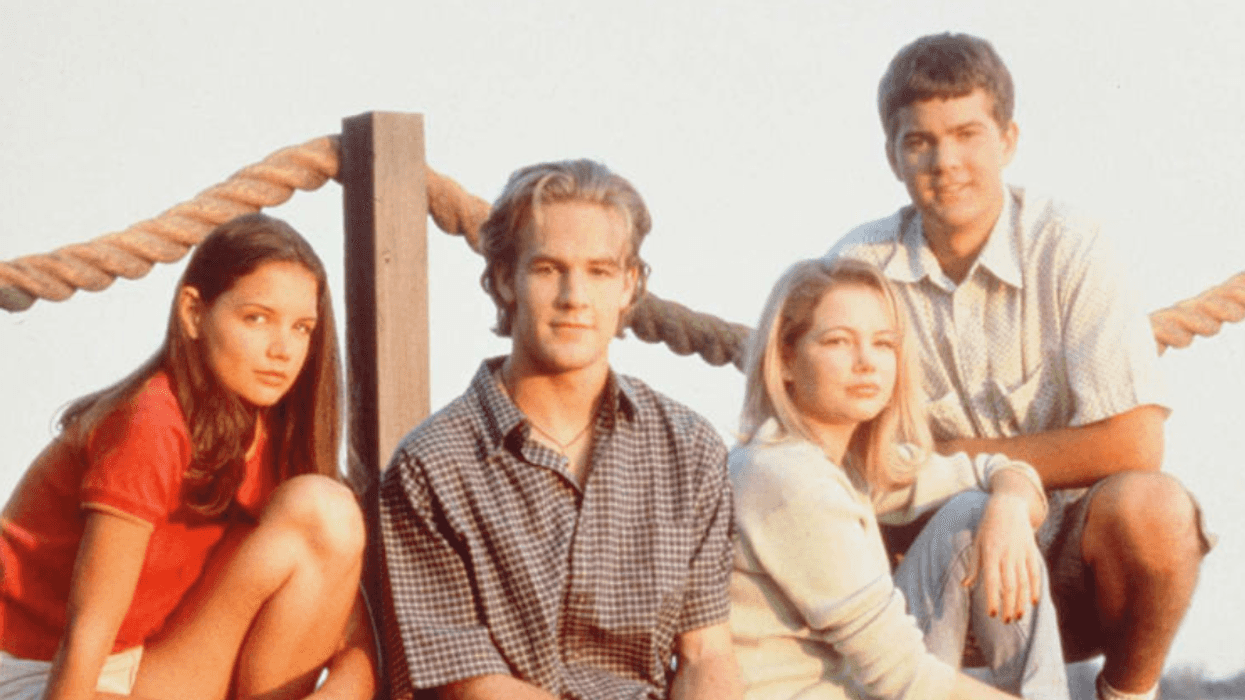

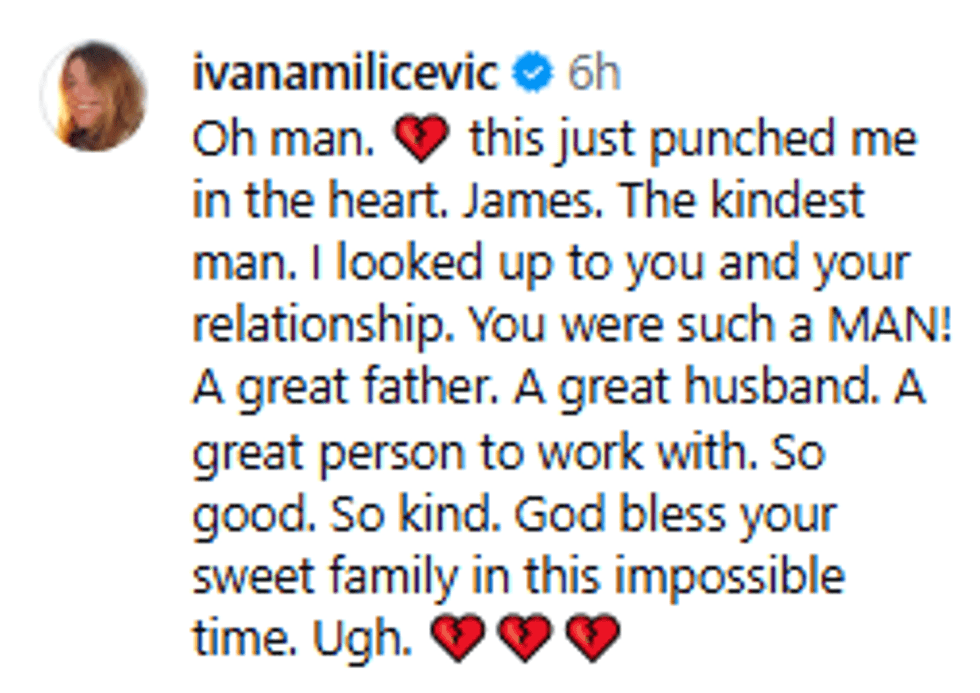

@vanderjames/Instagram