Folks, the Will Smith AI spaghetti saga has officially entered its “oh no, this is starting to look real” era. What began as a punchline in 2023 has developed into one of the clearest examples of how quickly generative video models can go from uncanny to disturbingly convincing.

The story begins with a surreal viral clip posted in March 2023, when Reddit user /u/chaindrop used ModelScope’s text-to-video tool to create a strange rendering of Will Smith eating spaghetti. His face distorted unpredictably, extra fingers appeared mid-bite, and the noodles acted like glitching pixels. It became a signature artifact of early generative video and what AI couldn’t do...yet.

The original clip was posted to Reddit’s r/StableDiffusion community.

The internet, being the internet, quickly embraced the absurdity, remixing and parodying the glitchy video across various platforms.

Even Will Smith joined in the fun in February 2024, sharing his own imitation of the clip.

He captioned the TikTok:

“This is getting out of hand!”

And as you can see, it is indeed getting out of hand:

@willsmith This is getting out of hand! #aivideo #sora

At the time, the “Will Smith Eating Spaghetti Test” became an informal benchmark in the AI community. If a model could render a person eating spaghetti—a deceptively complex mix of facial movement, hand coordination, texture physics, and audio—it was considered a sign of progress.

And for those who don’t know: Spaghetti is notoriously difficult for AI to animate because it involves unpredictable physics, overlapping textures, and constant facial movement, three things early models consistently failed to render.

For months, none of the models could do it convincingly. That has now changed.

This week, new videos created with Google’s Veo 3 and Veo 3.1 went viral for looking shockingly human. One clip shared by @AISearchIO features a smiling, lifelike Will Smith leaning into a bowl of spaghetti, camera gliding smoothly as noodles coil on the fork with near-perfect physics.

Posted in October, here's the updated version:

Will Smith in Veo 3.1 pic.twitter.com/SuK9jky3NW

— ⚡AI Search⚡ (@aisearchio) October 15, 2025

Another user, Javi Lopez, posted a separate take in May using Veo 3. It looks even smoother, though the exaggerated slurping sound effects remain a giveaway.

Watch here:

Just got access to Veo 3 and the first thing I did was try the Will Smith spaghetti test. SOUND ON pic.twitter.com/y0CiZwNxgM

— Javi Lopez ⛩️ (@javilopen) May 22, 2025

The visuals are wild, but those crunchy crunch noises? Permanently lodged in my soul.

The improvements are significant. Compared to the 2023 model’s jerky, Sims-like animations with plastic textures, today’s Veo 3.1 versions have smoother motion, more realistic lighting, and facial expressions that look way too human, all without prominent watermarks or labels warning of AI usage.

Google’s Veo series, created by DeepMind, specializes in cinematic video generation and now includes native audio, competing directly with models like OpenAI’s Sora. Native audio generation helps these new clips feel more lifelike, even if the sound design is a little off.

Veo interprets motion frame-by-frame using a more advanced diffusion architecture, which is why these new clips display realistic eye focus, wrist rotation, and even subtle head tilts that were impossible for earlier models.

Social media responded instantly, flooding timelines with jokes, disbelief, and warnings.

Although Hollywood has not yet been completely transformed, the industry is certainly side-eyeing the upgrades.

Across social media, modern AI technology has proven it can generate short scenes that mimic professional footage to the untrained eye. This raises important questions about digital doubles, reduced labor costs, automated stunt work, synthetic extras, and how studios will manage the likeness rights of real human actors.

The Will Smith spaghetti prompt is a small example, but the direction of this technology is unmistakable: Entertainment could soon be created without cameras, sets, or even human performers. Advertisers and political strategists are watching as well, since AI-generated human likenesses can now be produced faster and cheaper than traditional video shoots.

Social media is already feeling the impact. Hyper-realistic AI clips spread faster than fact-checking can keep up, blurring the line between parody, persuasion, and misinformation.

Politicians, in particular, have taken notice. In Washington, lawmakers warn that if AI can convincingly make Will Smith eat spaghetti, it can just as easily fabricate videos of elected officials saying or doing things that never happened, a concern driving new pushes for synthetic media labeling laws ahead of the 2026 election cycle.

On TikTok, creators have circulated AI-generated clips of public officials endorsing policies they oppose or appearing in fictional scandals, all made with free tools. AI Trump videos are especially common, with fabricated speeches and reactions presented as authentic.

One example can be seen here:

@newslitproject Just because something is all over your feed doesn't mean it's legit - especially with the proliferation of AI-generated fakes. Take a moment & look it up yourself before spreading misinformation. #NewsLiteracy #MediaLiteracy #ArtificialIntelligence

In the end, the AI spaghetti meme was never really about pasta. It illustrates how quickly generative video is advancing and provides a preview of the political and cultural challenges barreling toward us. The unsettling (and scary) truth is that AI is evolving faster than public understanding can keep pace.

What once seemed like a harmless, glitchy joke now reads like a warning: Realistic synthetic media isn’t a future concern. It’s here.

u/alison_bee/Reddit

u/alison_bee/Reddit u/Trowj/Reddit

u/Trowj/Reddit

@newstalkfm/Instagram

@newstalkfm/Instagram @newstalkfm/Instagram

@newstalkfm/Instagram @newstalkfm/Instagram

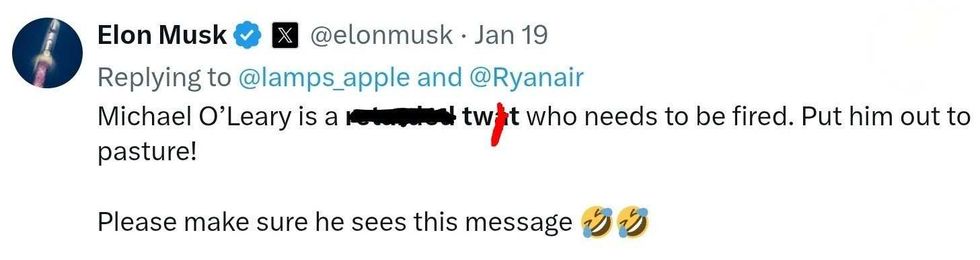

@newstalkfm/Instagram @elonmusk/X

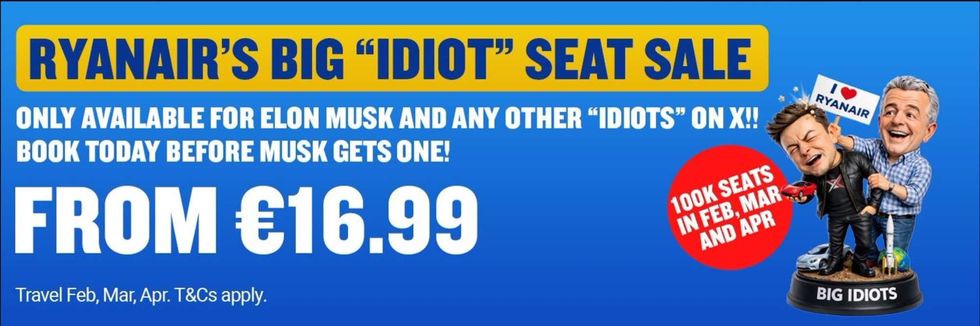

@elonmusk/X @Ryanair/X

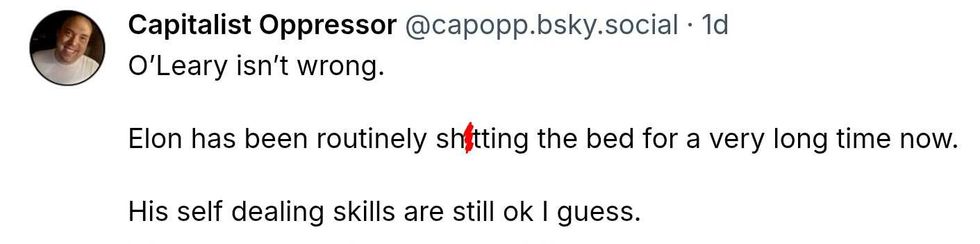

@Ryanair/X @capopp/Bluesky

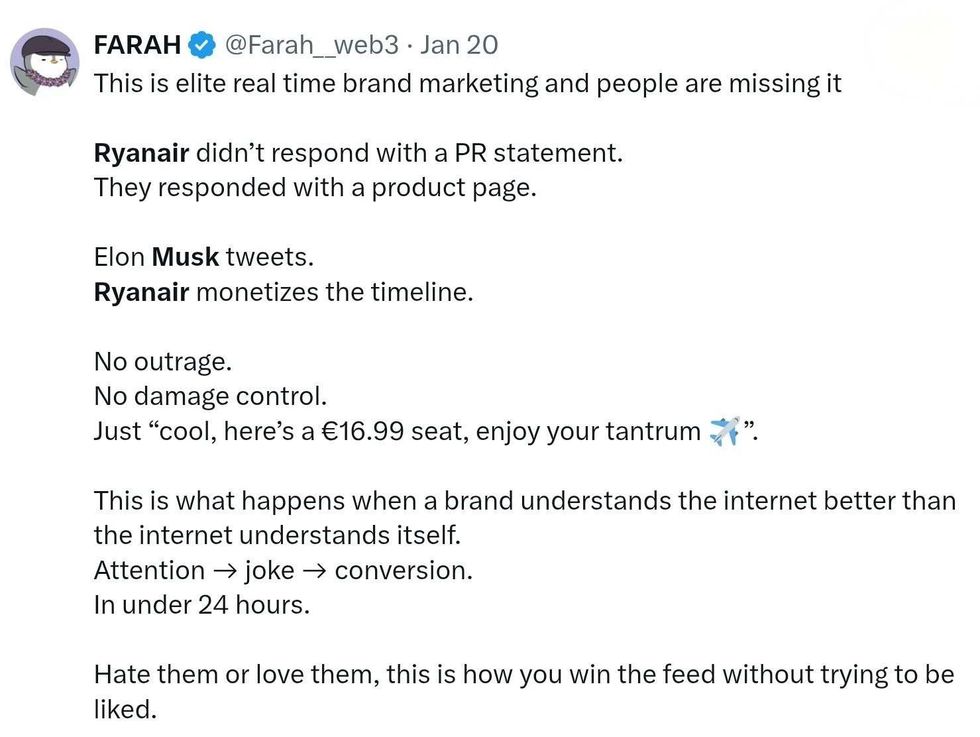

@capopp/Bluesky @Farah_web3/X

@Farah_web3/X @headdoc39/Bluesky

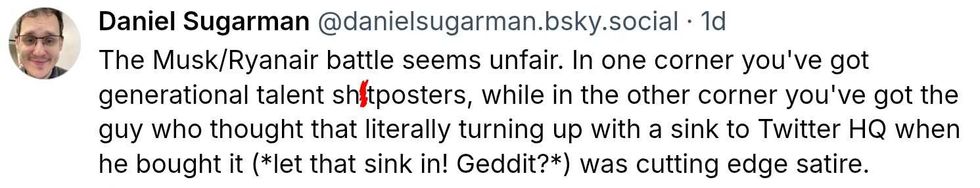

@headdoc39/Bluesky @danielsugarman/Bluesky

@danielsugarman/Bluesky

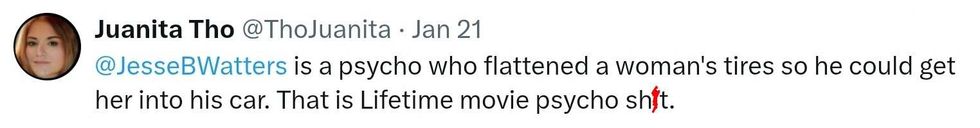

@ThoJuanita/X

@ThoJuanita/X

@shea_jordan/X

@shea_jordan/X