Twitter's use of algorithms to detect harmful speech has been successful in stifling ISIS propaganda on the site, but white supremacist content still runs rampant.

During a March meeting, a Twitter employee asked an executive a simple question: if Twitter could apply algorithms that successfully ban ISIS, why can't it do the same for white supremacy?

The answer is somewhat alarming.

Vice's Motherboard reported on Thursday that Twitter programmers are concerned the algorithms used by the site to automatically weed out hate speech could flag the accounts of Republican politicians.

Yikes.

"Content from Republican politicians could get swept up by algorithms aggressively removing white supremacist material," Vice found, based on conversations between Twitter employees. Banning politicians, one employee reportedly argued, "wouldn’t be accepted by society as a trade-off for flagging all of the white supremacist propaganda."

This is why Twitter refuses to suspend the accounts of political leaders whose posts technically violate the site's codes of conduct.

Although Twitter told Motherboard that this “is not [an] accurate characterization of our policies or enforcement—on any level,” and says it has strict rules against “abuse and hateful conduct,” many in the public sphere feel the microblogging giant is not doing enough to combat white supremacy.

“We have policies around violent extremist groups,” Twitter CEO Jack Dorsey recently said in a TED interview, but declined to elaborate on why, for example, Nazis are still allowed to post.

Twitter's efforts to eradicate ISIS from its platform offer a clue.

According to experts interviewed by Motherboard, "the measures taken against ISIS were so extreme that, if applied to white supremacy, there would certainly be backlash, because algorithms would obviously flag content that has been tweeted by prominent Republicans—or, at the very least, their supporters."

This means that cracking down on racists could generate bad press, and Twitter certainly does not want that.

For many people, this is unacceptable.

This is not a good look for Twitter, or for the Republican Party.

Additionally, Vice noted, there are far more white supremacists on Twitter, most of whom support President Donald Trump, than there are ISIS sympathizers.

“A very large number of white nationalists identify themselves as avid Trump supporters," JM Berger, author of Extremism, told Motherboard.

The company, therefore, fears alienating large groups of people.

“Cracking down on white nationalists will therefore involve removing a lot of people who identify to a greater or lesser extent as Trump supporters, and some people in Trump circles and pro-Trump media will certainly seize on this to complain they are being persecuted,” Berger said. “There's going to be controversy here that we didn't see with ISIS, because there are more white nationalists than there are ISIS supporters, and white nationalists are closer to the levers of political power in the US and Europe than ISIS ever was.”

The other stark difference between combating terrorism and white nationalism is the nuanced approach required for the latter.

“For terrorist-related content we've [had] a lot of success with proprietary technology but for other types of content that violate our policies—which can often [be] much more contextual—we see the best benefits by using technology and human review in tandem,” the company said.

Berger added that unlike ISIS, white supremacists do not generally fit into one-size-fits-all molds.

“With ISIS, the group's obsessive branding, tight social networks and small numbers made it easier to avoid collateral damage when the companies cracked down (although there was some),” he said. “White nationalists, in contrast, have inconsistent branding, diffuse social networks and a large body of sympathetic people in the population, so the risk of collateral damage might be perceived as being higher, but it really depends on where the company draws its lines around content.”

Vice also asked Twitter and YouTube if they would follow the example set by Facebook, which banned white supremacist content last month.

Neither company would explicitly commit; both referred Vice to their rules.

“Twitter has a responsibility to stomp out all voices of hate on its platform,” Brandi Collins-Dexter, senior campaign director at activist group Color Of Change, told Motherboard in a statement. “Instead, the company is giving a free ride to conservative politicians whose dangerous rhetoric enables the growth of the white supremacist movement into the mainstream and the rise of hate, online and off.”

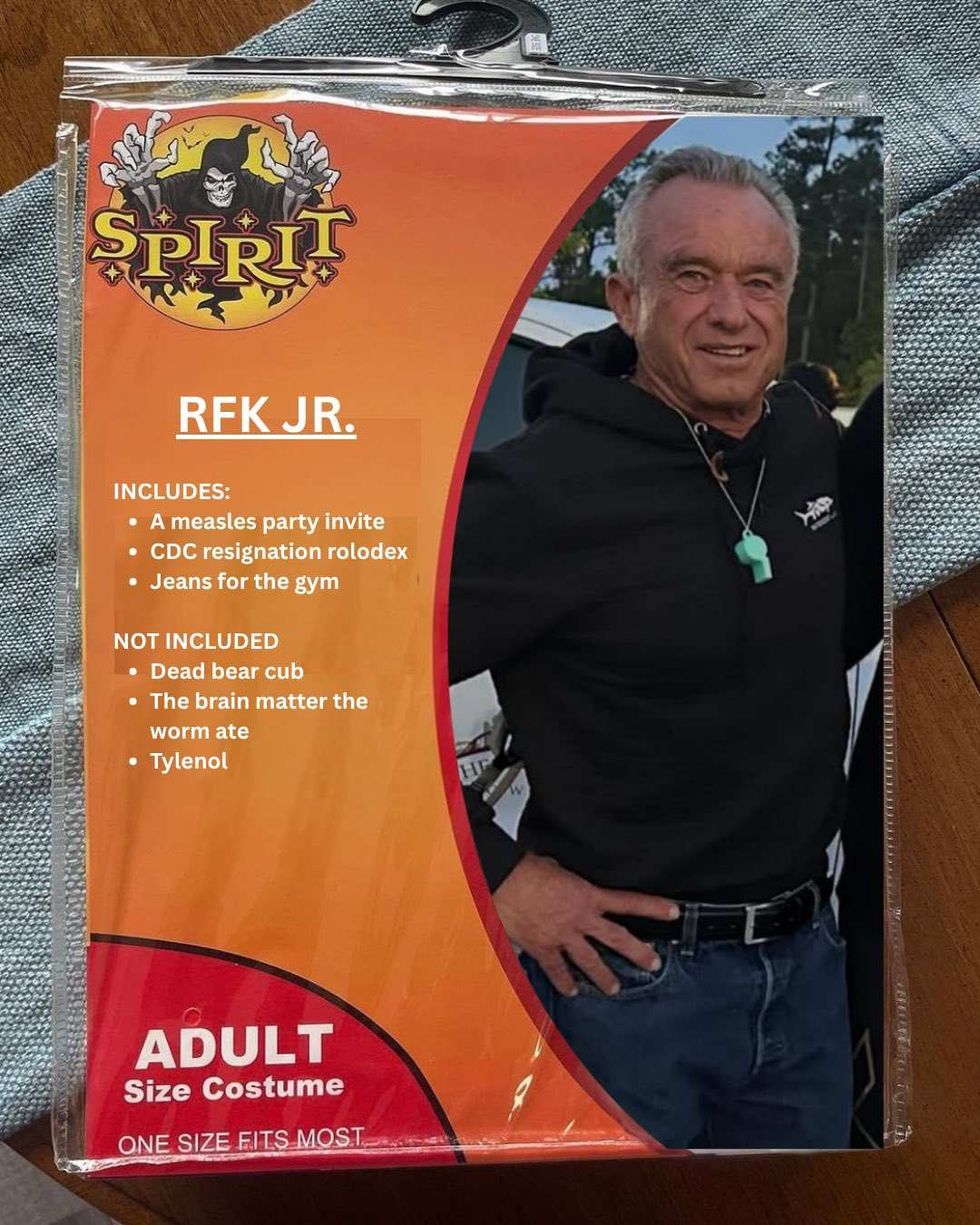

@vanessa_p_44/TikTok

@vanessa_p_44/TikTok @vanessa_p_44/TikTok

@vanessa_p_44/TikTok @vanessa_p_44/TikTok

@vanessa_p_44/TikTok @vanessa_p_44/TikTok

@vanessa_p_44/TikTok @vanessa_p_44/TikTok

@vanessa_p_44/TikTok

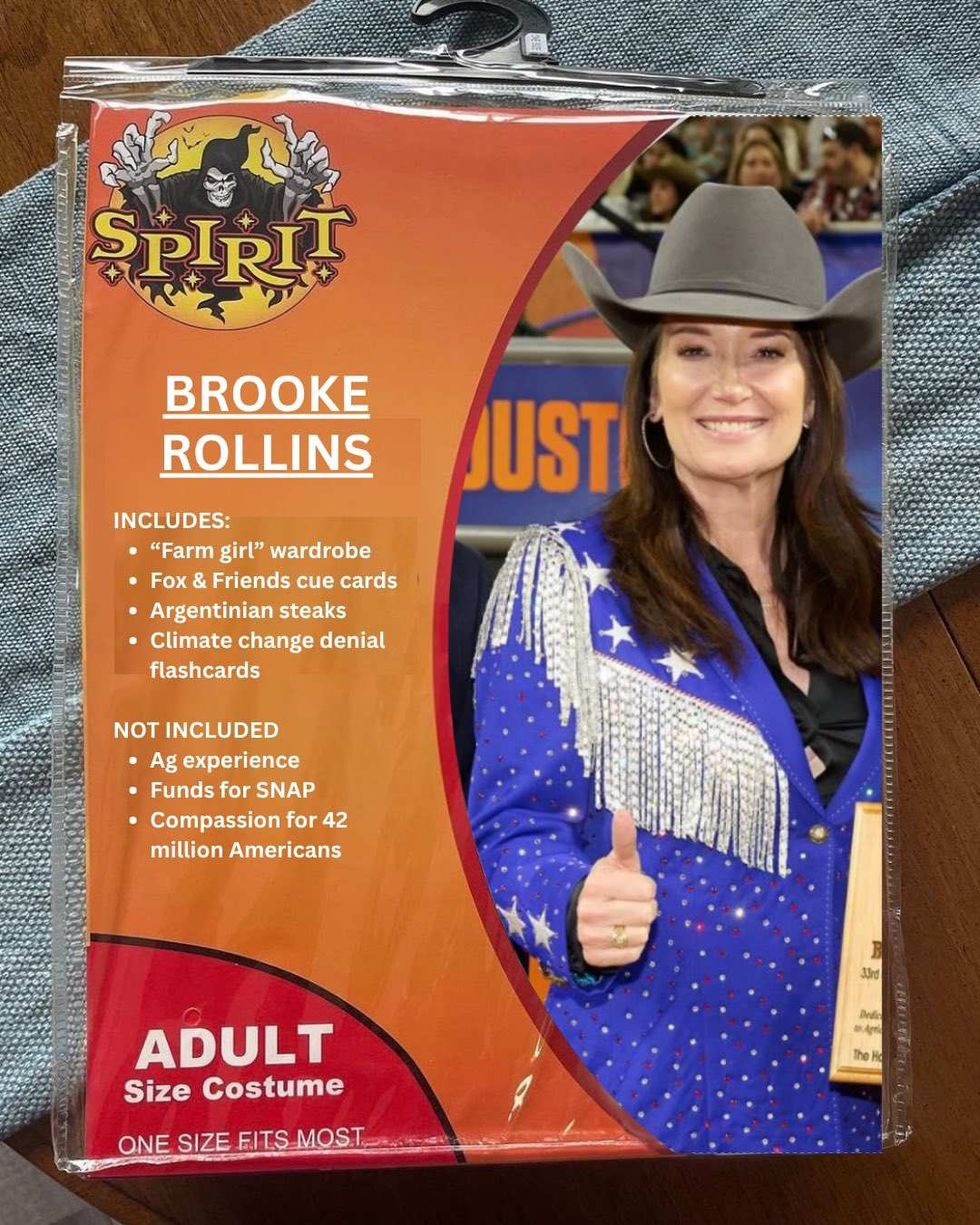

@GovPressOffice/X

@GovPressOffice/X @GovPressOffice/X

@GovPressOffice/X @GovPressOffice/X

@GovPressOffice/X @GovPressOffice/X

@GovPressOffice/X

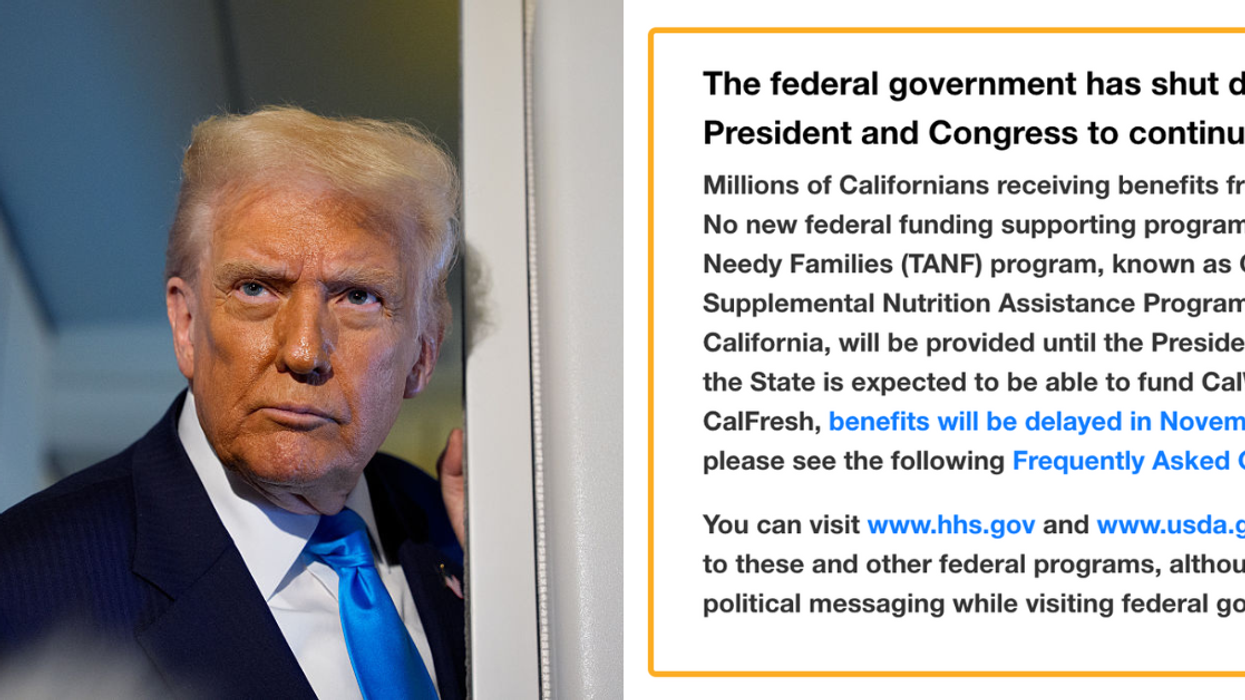

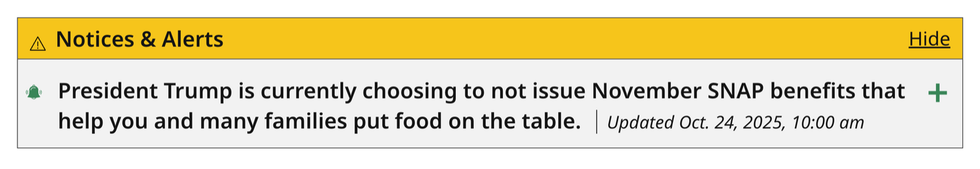

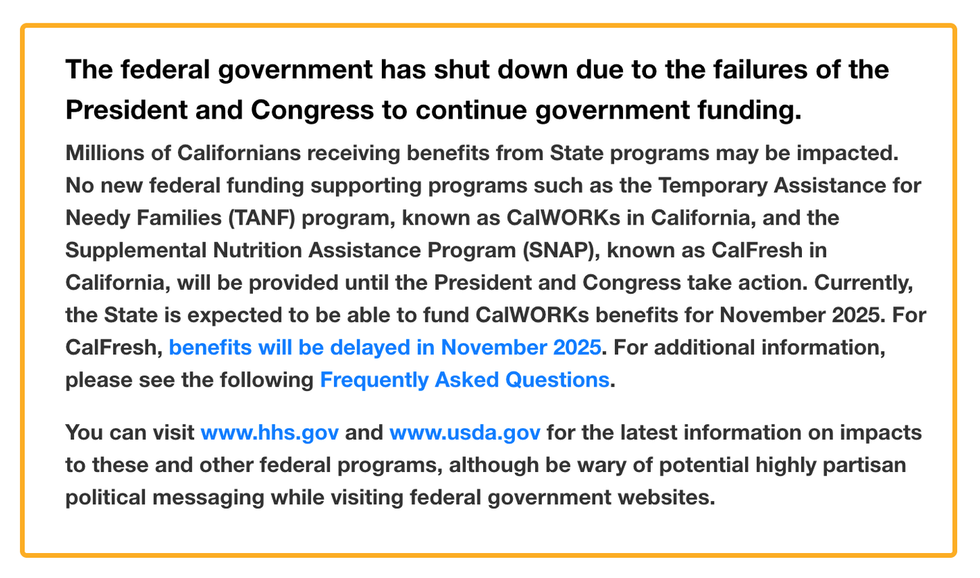

mass.gov

mass.gov cdss.ca.gov

cdss.ca.gov