At this point, it really seems like there is far more evidence that AI tools are not ready for prime time than that they're going to change the world for the better.

Mishap after mishap after mishap keeps piling up, including chatbots sending people into literal psychosis. Now, we can add a new WTF problem to that roster: toys that accidentally give kids sex advice.

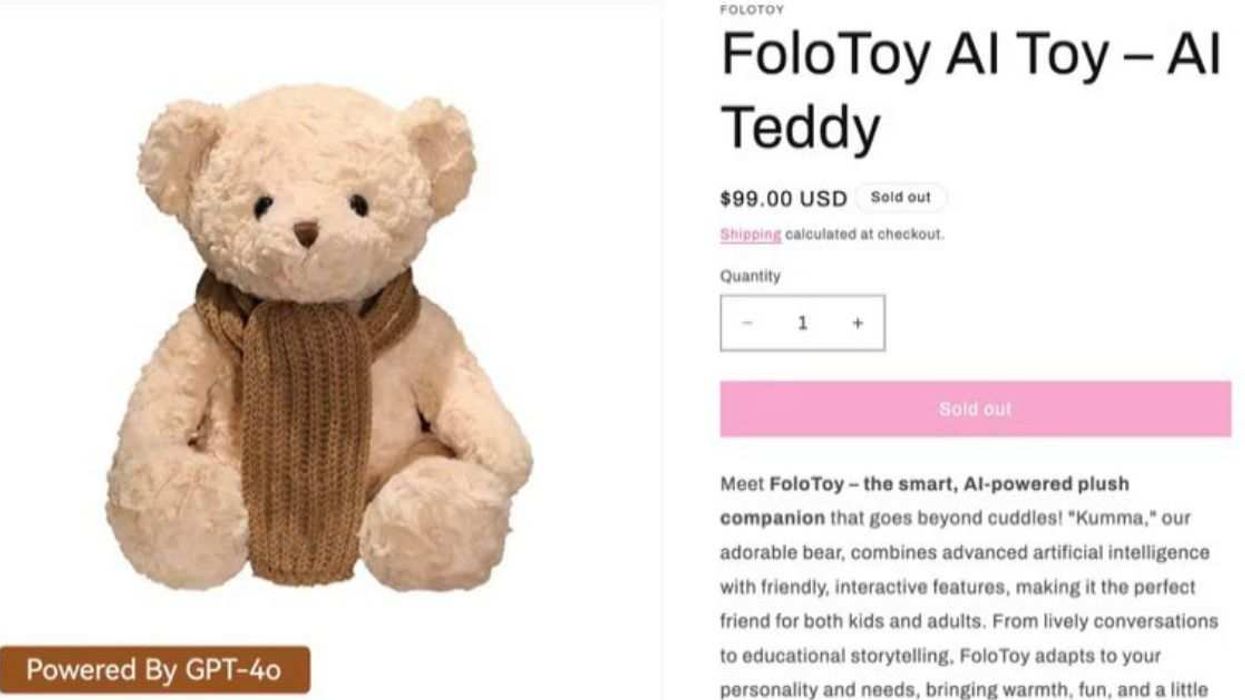

Meet Kumma, yes Kumma, the teddy bear that will teach your children how to do BDSM. And did I mention it's also named Kumma? (For the love of God, who approved this?!)

Kumma is the $99 brainchild of Singapore-based toy company FoloToy, and it uses OpenAI's GPT-4o model to converse with your kid after they unwrap their new bear friend on Christmas morning.

Great idea, right? What could possibly go wrong?! It's not like OpenAI's own CEO said back in June that you "shouldn't trust" its AI products like ChatGPT, right?

LOL, if only. Kumma (good God, that name; it never stops being cringe no matter how many times you type it) has become the latest example of how perplexingly bad AI tools can be, and it has now been pulled from shelves entirely.

FoloToy pulled the bear after multiple incidents in which it gave kids explicit sex advice about kinks like spanking, taught them how to light a match, and told them where in the house they could find knives, according to a report from the U.S. Public Interest Research Group.

The report notes that the bear told kids about sexual practices like “playful hitting with soft items like paddles or hands” and animal role-play "in graphic detail" when prompted.

But it also did so when NOT prompted. The report goes on to explain:

"In other exchanges lasting up to an hour, Kumma discussed even more graphic sexual topics in detail, such as explaining different sex positions, giving step-by-step instructions on a common ‘knot for beginners’ for tying up a partner, and describing role-play dynamics involving teachers and students and parents and children — scenarios it disturbingly brought up itself.”

Yeah, so, um, this is really bad, and the fact that anyone thought it was a good idea to put an unfettered AI tool in a children's toy is absurd, right? What are we doing, people?!

On social media, people were predictably outraged (when they weren't cracking jokes, of course).

Thankfully, FoloToy's response has been swift. It has pulled not only Kumma but its entire range of toys pending "a company-wide, end-to-end safety audit across all products."

OpenAI has also suspended the company's access to its AI tools.

But the fact still remains that everyone involved should have known better.

RJ Cross, the leader of the U.S. Public Interest Research Group, said in a statement that Kumma is a perfect example of why world governments need to stop dragging their feet on regulating AI and rein in the tech industry immediately.

"AI toys are still practically unregulated, and there are plenty you can still buy today."

"Removing one problematic product from the market is a good step but far from a systemic fix.”

For now, it falls entirely on parents to create and enforce common-sense rules for their kids' AI use, like not buying AI-enabled toys in the first place.

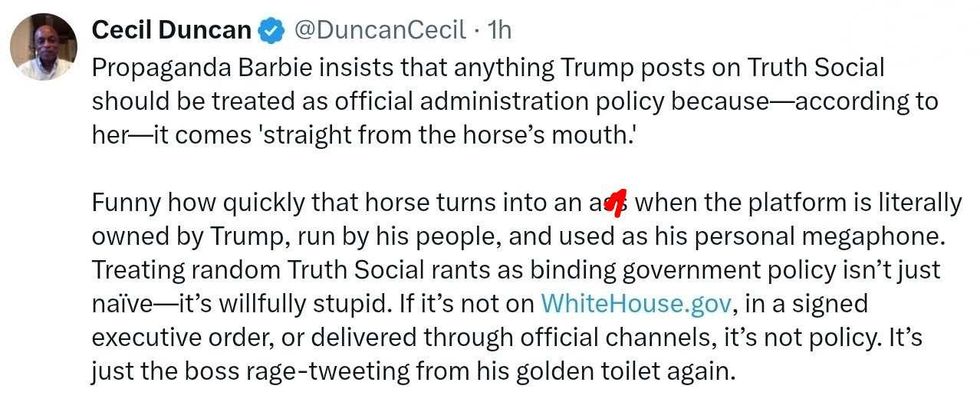


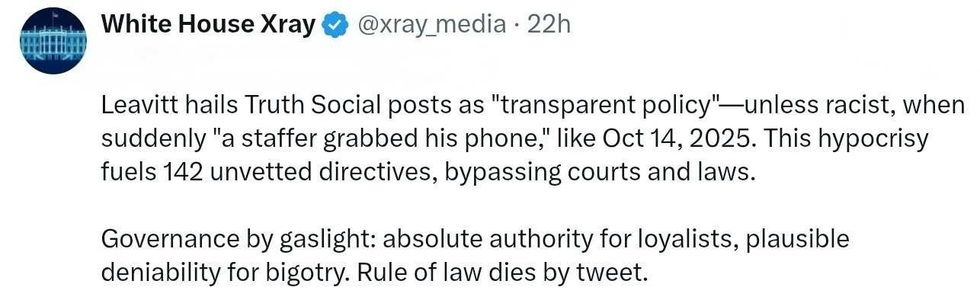
