Many animals can learn to understand human language, and a team of college students appears to have brought us one step closer to life with Dug, the talking dog from Disney-Pixar's 2009 hit Up.

You remember Dug, don't you? He was pretty adorable.

Now get this: The team at the University of Illinois at Urbana-Champaign has developed a headset of electrodes that reads the neural responses of Alma, a Labrador retriever, and then translates that activity into speech. The students are all Disney and Pixar fans, too: The project is called "Dug the Dog IRL" (Dug the Dog In Real Life).

The idea behind the device is that Alma's thoughts and emotions consistently produce the same patterns of activity in her brain. These patterns are then translated into short verbal messages.

In the video below, the students explain how they built the interface and how the device translates Alma's thoughts into pre-recorded vocal messages.

Alma the Talking Dog - Dug the Dog IRL (youtu.be)

Team lead Jessica Austriaco says:

"Our brains and dogs' brains produce electrical activity. We can build tools to measure this activity in the form of EEG. Although we can't translate dog speech directly into English, we used EEG to map Alma's brain in response to different stimuli."

Signal processing lead Bliss Chapman chimes in, and together he and Austriaco explain how the team created the custom electrodes:

Our custom electrodes are 3D-printed and painted with a coating of nickel paint to make them conductive. Throughout the project, we modeled three different iterations of electrodes.

Each electrode has short spikes that reach through Alma's fluffy fur without causing her discomfort. Wires are connected to the electrodes through holes in the back, and those connections are solidified with conductive paint. Two 3D-printed electrodes are sewn into a headband, and that headband is secured with an elastic chin strap in order to keep the electrodes tightly pressed onto Alma's head.

One electrode is used as ground measurement and it's attached to Alma's ear. The wires are then braided and coated in conductive paint in order to reduce interference from other circuits and also Alma's muscle activity. Wires run down Alma's back into a custom engineered analog circuit mounted on a harness. The circuit amplifies the waveform and performs low and high pass filtering on the signal.
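For readers curious what "low and high pass filtering" does to a signal, here is a minimal digital sketch in Python. The team's filtering happens in an analog circuit, so this is only an illustration of the same idea in software, not their design. The sample rate and cutoff frequencies are assumptions chosen for the example; the article does not state them.

```python
import math

SAMPLE_RATE_HZ = 64  # assumed for illustration; not given in the article


def low_pass(samples, cutoff_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """First-order low-pass filter: keeps slow changes, smooths fast ones."""
    if not samples:
        return []
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out = [samples[0]]
    for x in samples[1:]:
        # Move a fraction alpha of the way toward the new sample.
        out.append(out[-1] + alpha * (x - out[-1]))
    return out


def high_pass(samples, cutoff_hz, sample_rate_hz=SAMPLE_RATE_HZ):
    """First-order high-pass filter: removes slow drift, keeps fast changes."""
    if not samples:
        return []
    dt = 1.0 / sample_rate_hz
    rc = 1.0 / (2 * math.pi * cutoff_hz)
    alpha = rc / (rc + dt)
    out = [samples[0]]
    for prev_x, x in zip(samples, samples[1:]):
        # Pass the change between samples while letting old output decay.
        out.append(alpha * (out[-1] + x - prev_x))
    return out


def band_pass(samples, low_cut_hz=1.0, high_cut_hz=20.0):
    """Chain both filters; the cutoff values are hypothetical defaults."""
    return low_pass(high_pass(samples, low_cut_hz), high_cut_hz)
```

Chaining the two filters keeps only the frequencies between the cutoffs, which is one way to suppress both slow electrode drift and fast electrical noise before the signal is digitized or analyzed.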

They note that an Arduino reads the data from an analog pin and writes the data to a serial port...

...and that the Raspberry Pi "reads data in from the Arduino and performs machine learning classification on the Fourier transform [decomposes a function of time into the frequencies that make it up] of the last two seconds of data."

"If the machine learning algorithm classifies Alma's neural response as 'treat,'" Chapman says, "then the Raspberry Pi triggers a pre-recorded Alma voice to play out of the speaker."
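The classification step described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the team's code: the sample rate, the nearest-centroid classifier, and all names (`WindowClassifier`, `magnitude_spectrum`, `sine_wave`) are hypothetical, since the article does not say which machine learning method or sampling rate was used. Only the two-second window and the Fourier transform come from the students' description. A naive transform is used to avoid dependencies; a library routine such as `numpy.fft.rfft` would be the usual choice.

```python
import cmath
import math
from collections import deque

SAMPLE_RATE_HZ = 64   # assumed; the article does not give a sampling rate
WINDOW_SECONDS = 2    # from the article: "the last two seconds of data"
WINDOW_SIZE = SAMPLE_RATE_HZ * WINDOW_SECONDS


def magnitude_spectrum(samples):
    """Naive discrete Fourier transform: magnitudes for bins 0..N/2."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the constant offset
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                  for t, x in enumerate(centered))
        spectrum.append(abs(acc) / n)
    return spectrum


class WindowClassifier:
    """Labels the latest two-second window by its nearest reference spectrum.

    Nearest-centroid matching is a stand-in for whatever model the
    students actually trained.
    """

    def __init__(self, centroids):
        self.centroids = centroids  # label -> reference magnitude spectrum
        self.window = deque(maxlen=WINDOW_SIZE)

    def push(self, sample):
        """Add one reading; return a label once the window is full."""
        self.window.append(sample)
        if len(self.window) < WINDOW_SIZE:
            return None
        features = magnitude_spectrum(list(self.window))
        return min(self.centroids,
                   key=lambda label: math.dist(features, self.centroids[label]))


def sine_wave(freq_hz, n=WINDOW_SIZE):
    """Synthetic test signal; real input would be readings from the Arduino."""
    return [math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE_HZ)
            for t in range(n)]
```

In use, each reading arriving from the Arduino would be passed to `push`, and a returned label of "treat" would be the cue to play the matching pre-recorded message.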

Sure enough, at one point, Alma becomes excited when she's about to be fed a doggie treat. The device reads her responses and the speaker delivers the following response: "Oh! Treat! Treat! Yes, I want the treat. I do so definitely want the treat. I would be very happy if I were to have the treat!"

The students will present their work at the University of Illinois's Engineering Open House.

Impressive.

We're excited to see how this develops.

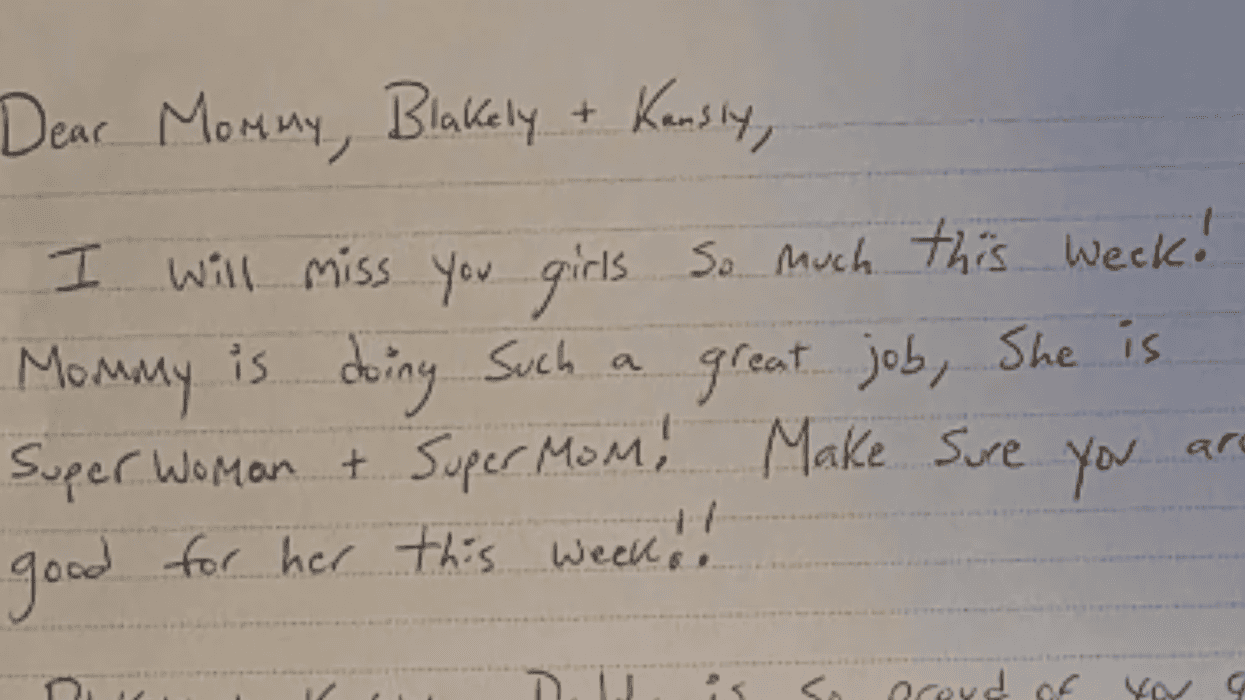

@jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram @jennifer.garner/Instagram

@jennifer.garner/Instagram

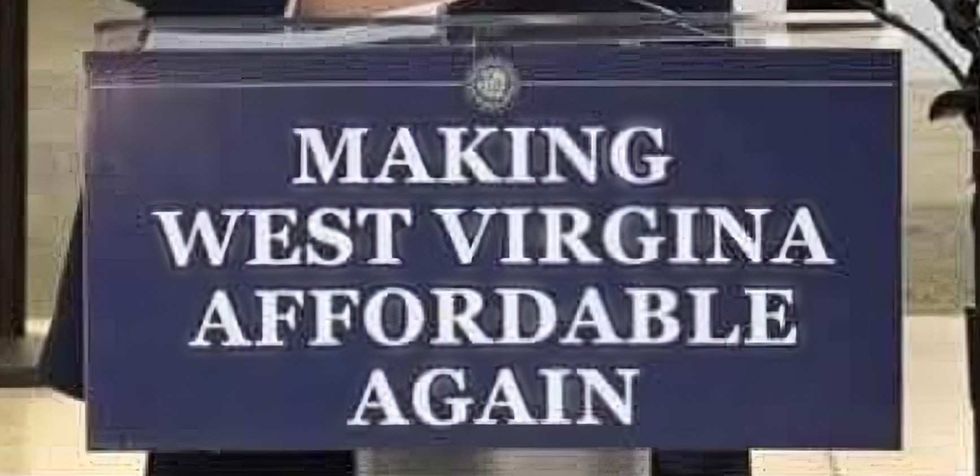

@ameliaknisely/X

@ameliaknisely/X WDTV 5 News/Facebook

WDTV 5 News/Facebook r/WestVirginia/Reddit

r/WestVirginia/Reddit WDTV 5 News/Facebook

WDTV 5 News/Facebook r/WestVirginia/Reddit

r/WestVirginia/Reddit r/WestVirginia/Reddit

r/WestVirginia/Reddit WDTV 5 News/Facebook

WDTV 5 News/Facebook r/WestVirginia/Reddit

r/WestVirginia/Reddit r/WestVirginia/Reddit

r/WestVirginia/Reddit WDTV 5 News/Facebook

WDTV 5 News/Facebook WDTV 5 News/Facebook

WDTV 5 News/Facebook