Machines are often thought to be above reproach when it comes to bias of any kind. After all, they don't have the social hang-ups that encumber humans.

But is that actually true?

Congresswoman Alexandria Ocasio-Cortez used her five minutes during a House Oversight Committee hearing last week to call attention to biases literally programmed into certain forms of technology, especially, as she pointed out, facial recognition technology.

While questioning Joy Buolamwini, founder of the Algorithmic Justice League, Ocasio-Cortez asked which demographic is primarily creating these algorithms and whom the algorithms are designed to recognize.

Watch below:

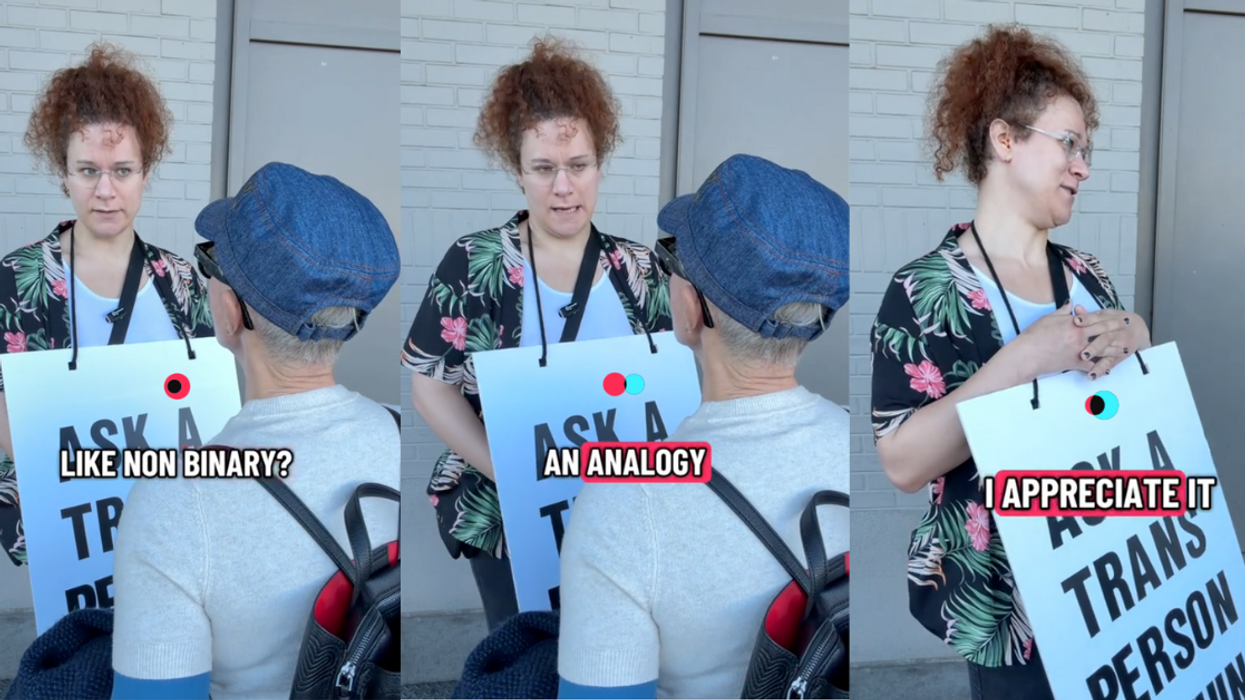

After Buolamwini confirmed that facial recognition algorithms are less reliable at identifying women, people of color, and transgender individuals, she went on to point out that these algorithms are primarily developed by white cisgender men and, consequently, identify them most reliably.

Ocasio-Cortez responded:

"So, we have a technology that was created and designed by one demographic that is only mostly effective on that one demographic and they're trying to sell it and impose it on the entirety of the country?"

"We have the pale male data sets being used as something universal when that's actually not the case," Buolamwini confirmed.

The use of facial recognition technology is growing rapidly, especially within law enforcement agencies like the FBI. Large tech companies are courting these agencies, racing to be the first to perfect the technology.

However, unless the technology is corrected to work reliably for people of color, trans people, and women, using facial recognition to identify potential criminals could lead to the misidentification and wrongful imprisonment of members of marginalized communities.

People were cheering the Congresswoman's line of questioning.

Kudos, Congresswoman.

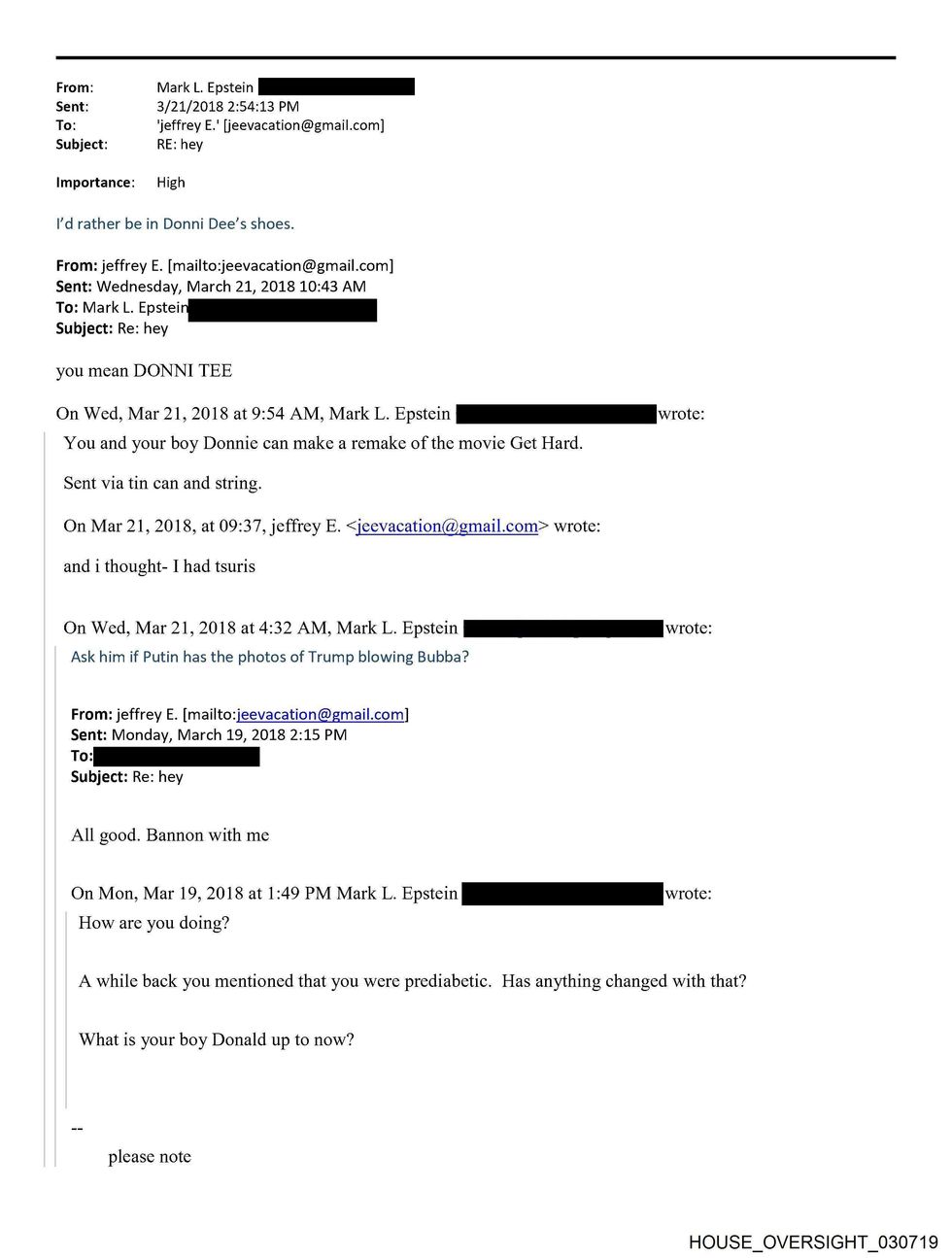

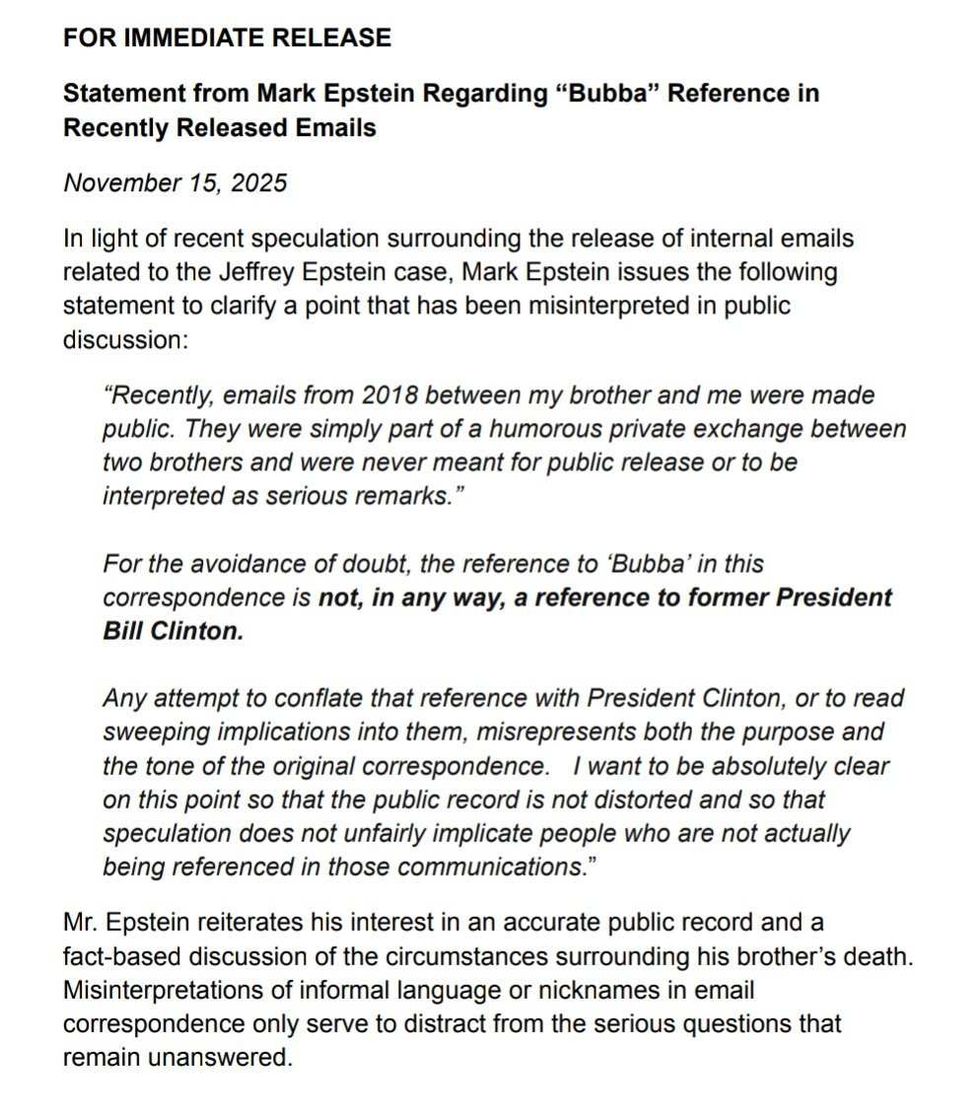

House Oversight Committee

@JayShamsX

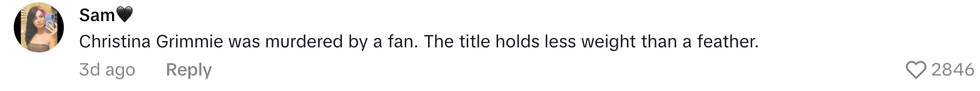

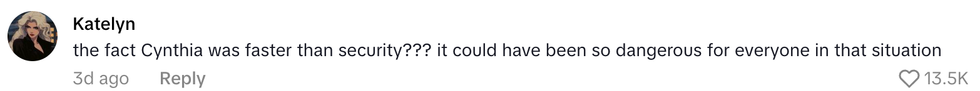

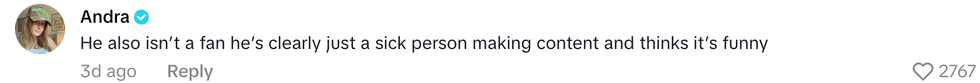

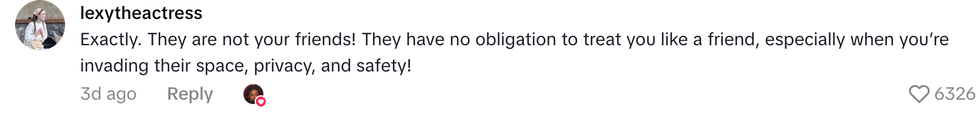

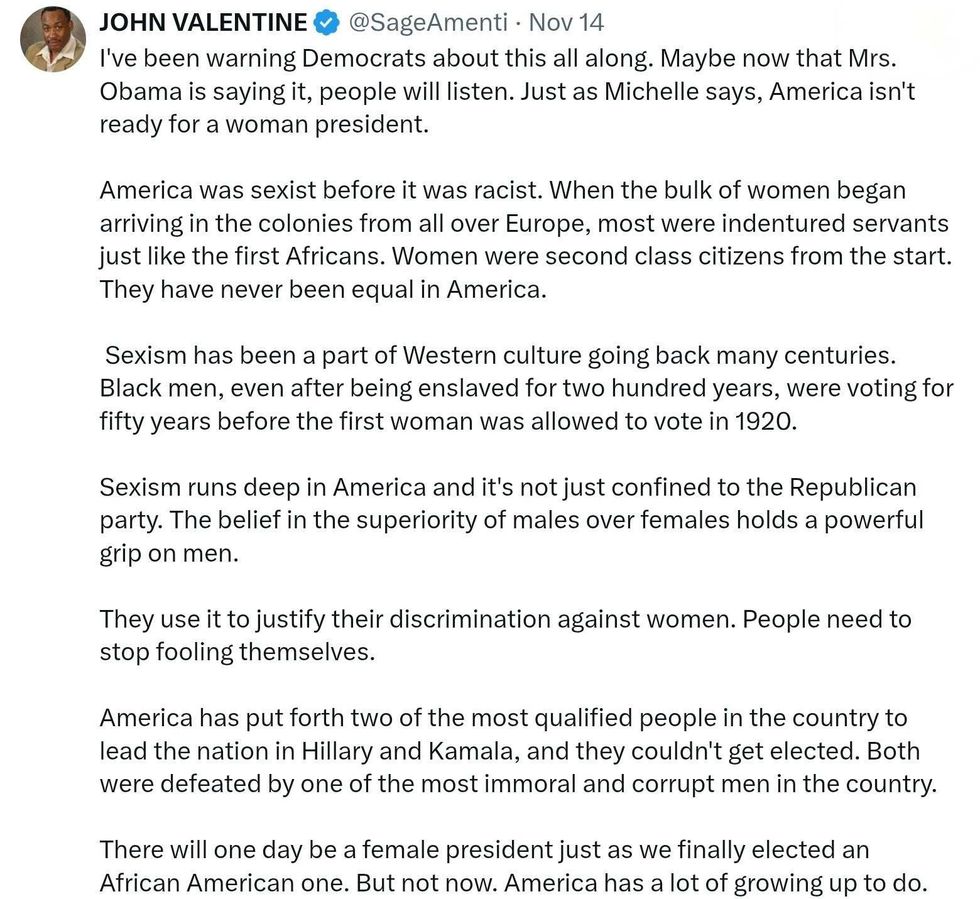
