Artificial intelligence technology has made great strides of late, showing it can not only assess creditworthiness and predict shopping behavior, but also interpret what animals are saying, create nude art and even write the next installment of Game of Thrones.

However, it turns out machine learning is missing a critical component of human thought: the ability to not be sexist and racist.

Artificial intelligence has been a boon for industries such as insurance, banking and criminal justice, allowing companies and organizations to quickly and efficiently synthesize large amounts of data to determine who’s most likely to file a claim or default on a loan or in which neighborhoods a crime is more likely to occur.

However, biased results have been a growing problem. For instance, a ProPublica investigation of COMPAS (Correctional Offender Management Profiling for Alternative Sanctions), an AI algorithm frequently used in the U.S., found that the algorithm rated black people as a higher recidivism risk than proved to be the case. In a separate investigation by the Human Rights Data Analysis Group, crime-predicting software was found to frequently send police to low-income neighborhoods regardless of the actual rate of crime.

Many claim the problem is with the input data, not the AI itself.

“In machine learning, we have this problem of racism in and racism out,” says Chris Russell of the Alan Turing Institute in London.

A group of German researchers hopes to change that with a new approach to keeping bias out of the process of training algorithms. Niki Kilbertus of the Max Planck Institute for Intelligent Systems, the project’s lead researcher, points out that AI learns from patterns in data without accounting for how sensitive attributes shaped that data: the color of someone’s skin, for example, may have made them more likely to be arrested in the first place, or to be denied a job that could have made them more creditworthy.

“Loan decisions, risk assessments in criminal justice, insurance premiums, and more are being made in a way that disproportionately affects sex and race,” Kilbertus told New Scientist.

Due to discrimination laws and/or individuals’ reluctance to reveal their sex, race or sexuality, companies have faced barriers to looking at such sensitive data, so the fix proposed by Kilbertus’ group involves privately encrypting this information for companies using AI software. An independent regulator would then be able to check the sensitive information against the AI outputs and, if the outputs are found to be unbiased, issue a fairness certificate indicating all variables have been considered.
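To make the regulator's check concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the researchers' method: the article does not say which fairness test a regulator would apply, so the sketch uses one common and simple criterion, the gap in positive-decision rates between groups. It also leaves out the encryption step entirely. The function names, the sample data and the 0.1 tolerance are all hypothetical.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the share of positive decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        positives[group] += int(decision)
    return {group: positives[group] / totals[group] for group in totals}


def parity_gap(decisions, groups):
    """Difference between the highest and lowest group approval rates."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


def passes_audit(decisions, groups, tolerance=0.1):
    """Hypothetical pass/fail rule; the tolerance is an arbitrary assumption."""
    return parity_gap(decisions, groups) <= tolerance


# Made-up data: model outputs paired with a sensitive attribute
# that only the auditor is assumed to see.
decisions = [1, 1, 0, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

print(approval_rates(decisions, groups))
print(passes_audit(decisions, groups))
```

In this made-up sample, group "a" is approved three times out of four and group "b" once out of four, so the audit fails. A real audit would likely weigh several criteria, since equal approval rates alone do not show that a system is fair.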

While the results of the proposal by Kilbertus’ group have yet to be seen, few would dispute that there’s still much work to be done in adapting artificial intelligence to our increasingly complicated world.

“What’s at stake when we don’t have a greater understanding of racist and sexist disparities goes far beyond public relations snafus and occasional headlines,” Safiya Noble, author of Algorithms of Oppression, told Canadian newsmagazine The Walrus. “It means not only are companies missing out on new possibilities for deeper and more diverse consumer engagements, but they’re also likely to not recognize how their products and services are part of systems of power that can be socially damaging.”

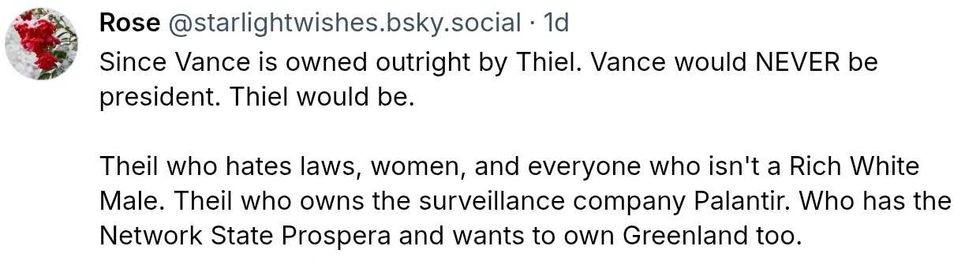

@starlightwishes/Bluesky

@starlightwishes/Bluesky