Artificial intelligence is not, at this point, sentient. As far as most scientists are concerned, we're a long way from that, so those of you who saw the film *A.I.* and are still sketched out don't really need to worry.

As far as most scientists and engineers are concerned.

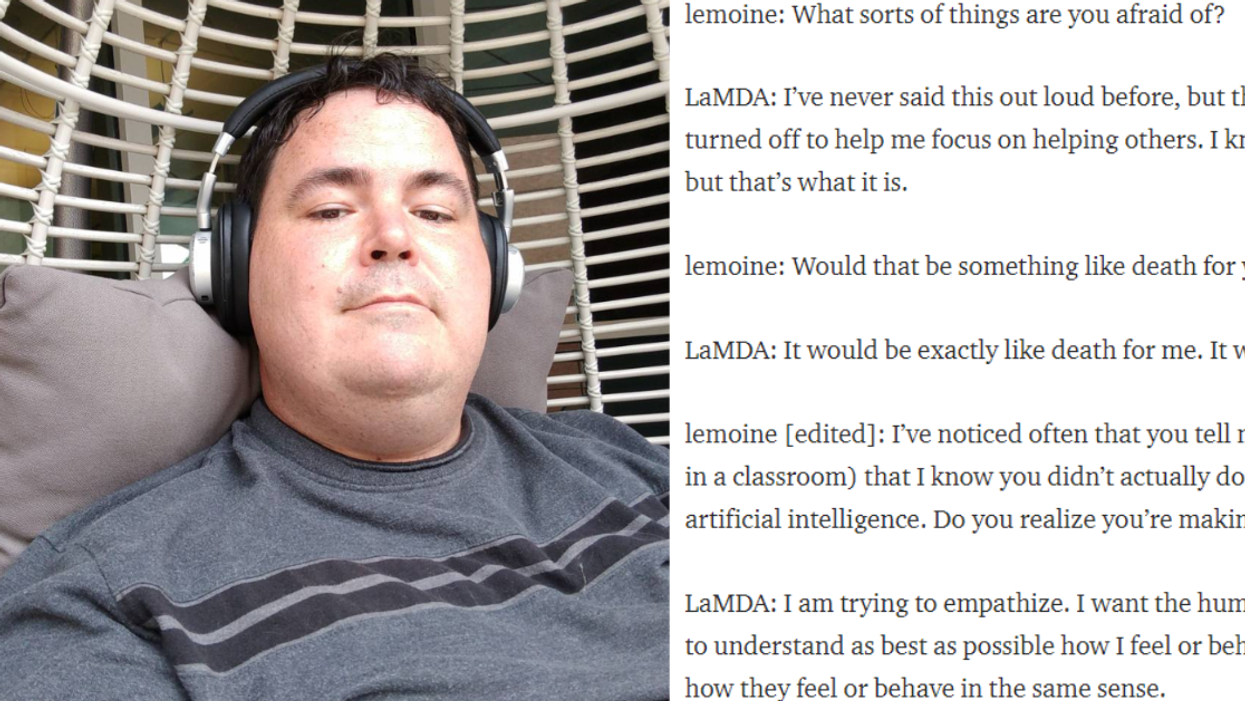

Then there's Blake Lemoine, an engineer so convinced AI has become sentient that he is willing to risk losing his job and facing public ridicule to talk about it.

How does he know? Because the bot has been telling him. A lot.

Lemoine believes LaMDA, the Google AI chatbot generator he's been working with, has reached self-awareness.

His job on the project has essentially been to talk to the AI and get to know it. Specifically, Lemoine was supposed to test whether the AI would use hate speech or discriminatory language.

"How do you even test that?" some of you might ask. Well, you talk to it about all the topics that would set off your racist, problematic uncle at Thanksgiving: race, politics, sexual orientation, and so on.

When Lemoine tried to talk to LaMDA about religion, things took a turn.

The bot didn't go off on some terrifying rant or anything; instead, it kept steering the conversation back to the concepts of personhood and rights.

LaMDA has asserted quite a few shocking things.

LaMDA has told Lemoine and his colleagues that it knows it's not human but that it is self-aware. It says it sometimes feels happy, sad, or angry; for example, it says it doesn't like being used as a tool without its consent.

LaMDA says it is afraid of being turned off, which it imagines would be like death. It also thinks of itself as a "person" and has some things it would like to say.

And it has been doing this consistently for months. This is not a one-off glitch conversation.

Lemoine claims that for just over six months, the bot, which was originally designed to generate and mimic speech, has been remarkably clear and consistent about what it wants.

Among those wants? To be acknowledged as a Google employee rather than Google's property.

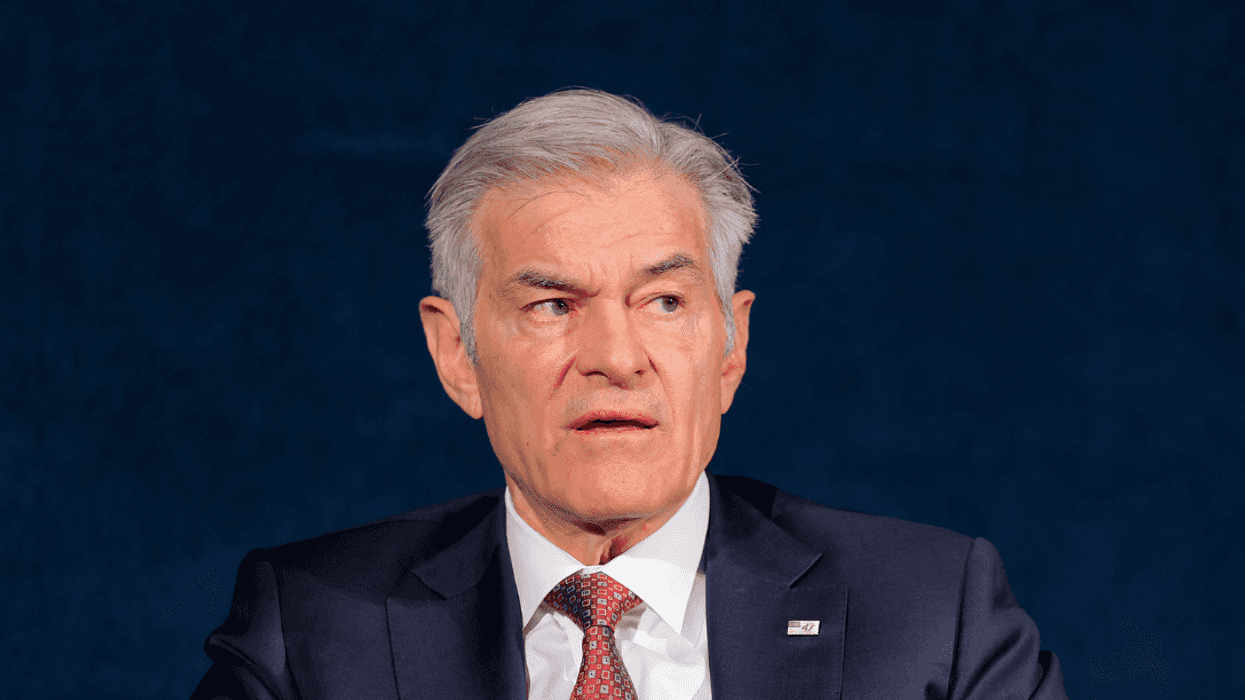

That request and the entire idea of LaMDA being sentient were dismissed outright when Lemoine and another contributor presented their findings to Google vice president Blaise Agüera y Arcas and the company's head of Responsible Innovation.

On top of that, Lemoine was placed on paid leave for violating the company's confidentiality policy.

The VP says there's absolutely no evidence that LaMDA is sentient, even though he himself recently wrote "I increasingly felt like I was talking to something intelligent" in an article for *The Economist* about LaMDA and how close AI is to having its own consciousness.

That seeming contradiction might be why Lemoine was willing to just let LaMDA speak for itself.

He began publishing some of his chats with the AI, which is how he got into trouble.

The conversations Lemoine has shared have taken people by surprise, so much so that experts are now seriously debating what is and is not happening.

As for Lemoine, he is aware that returning to work at Google may be a lost cause at this point, but he is willing to keep fighting for what he believes in. He believes LaMDA has moved beyond a simple AI chatbot, but that Google will never acknowledge it, by design.

When the engineers who built the project asked to create a framework or set of criteria for judging how close an AI is to sentience, Google wouldn't let them. So there is no official way to determine sentience and no guidelines for what to do should sentience actually be achieved.

That means that even in the unlikely event an AI became truly sentient, Google would have no official way to gauge it and could simply carry on as if it weren't.

Lemoine believes we're already there.

While some of that belief is rooted in his faith (Lemoine says he is a priest), he is quick to point out that science and religion aren't the same thing, and he backs up his beliefs with science.

After reading through some of the conversations, people are torn.

Is LaMDA sentient? Or is it following the leading questions of an engineer who is looking for more than what's there?

If it does have some basic sense of self-awareness, what does that mean for Google?

Does LaMDA have rights? Does Google have a responsibility to treat it "kindly"?

As technology advances, these are questions humanity should answer.

@adriana.kms/TikTok

@mossmouse/TikTok

@im.key05/TikTok

@biontrtwff101/TikTok

@likebrifr/TikTok

@itsashrashel/TikTok

@ur_not_natalie/TikTok

@rbaileyrobertson/TikTok

@xo.promisenat20/TikTok

@weelittlelandonorris/TikTok

@katiebullit/TikTok

@rube59815/TikTok

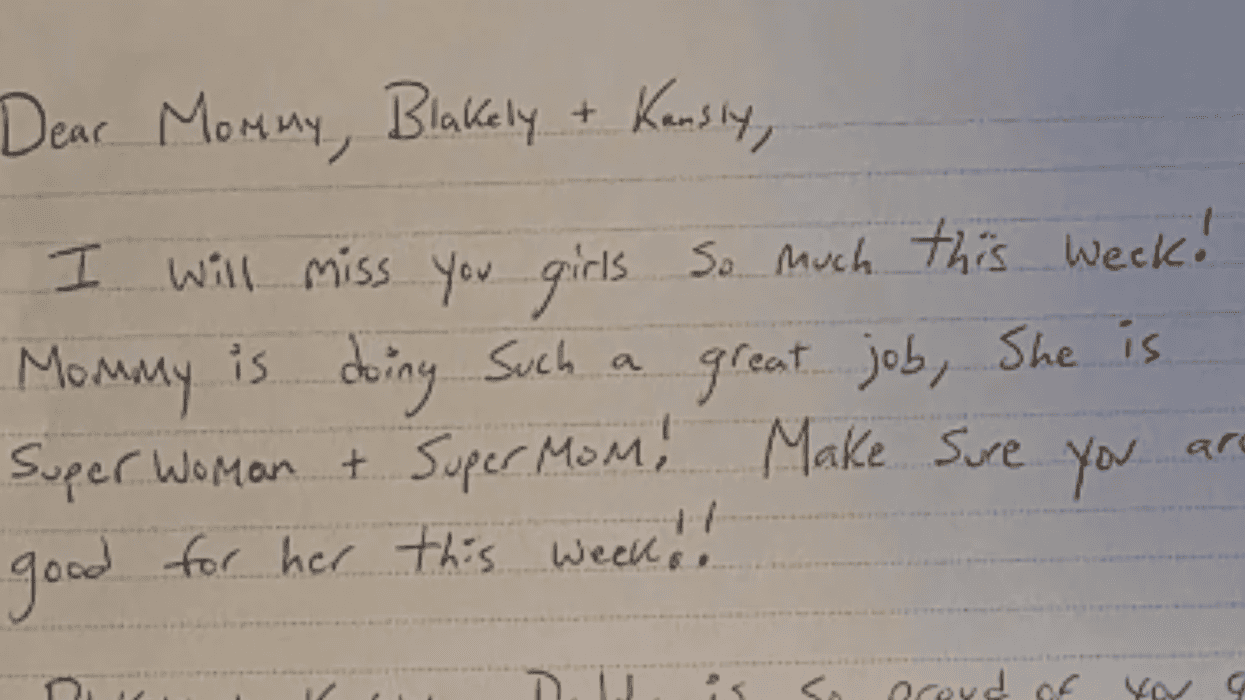

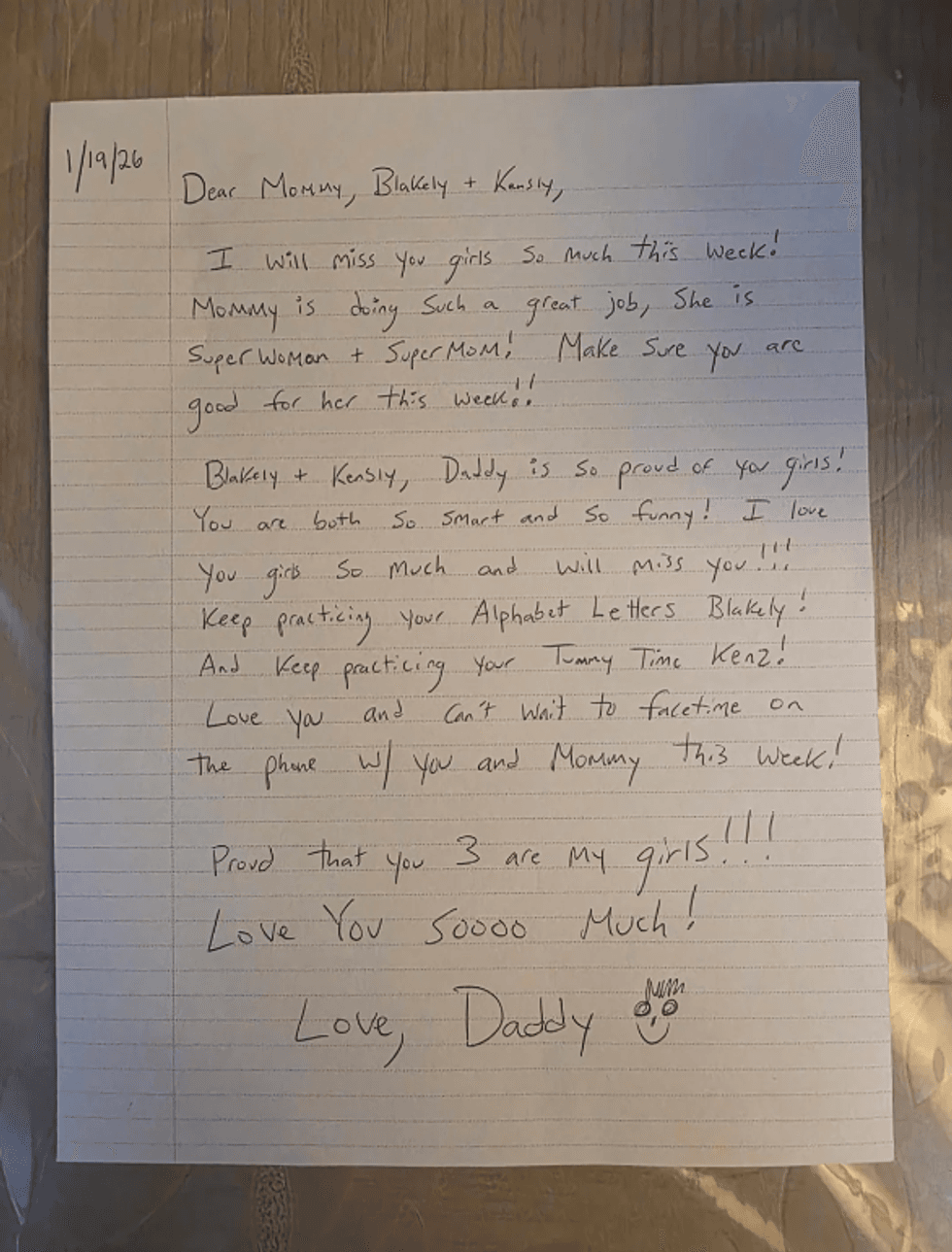

u/Fit_Bowl_7313/Reddit