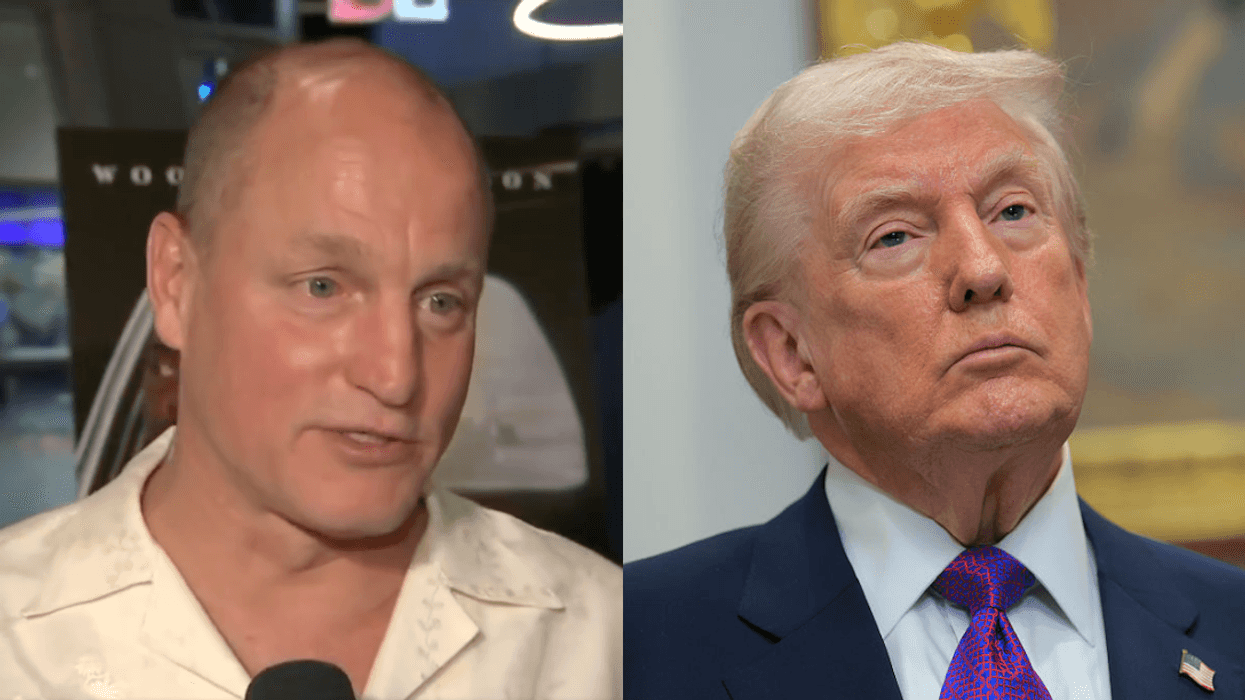

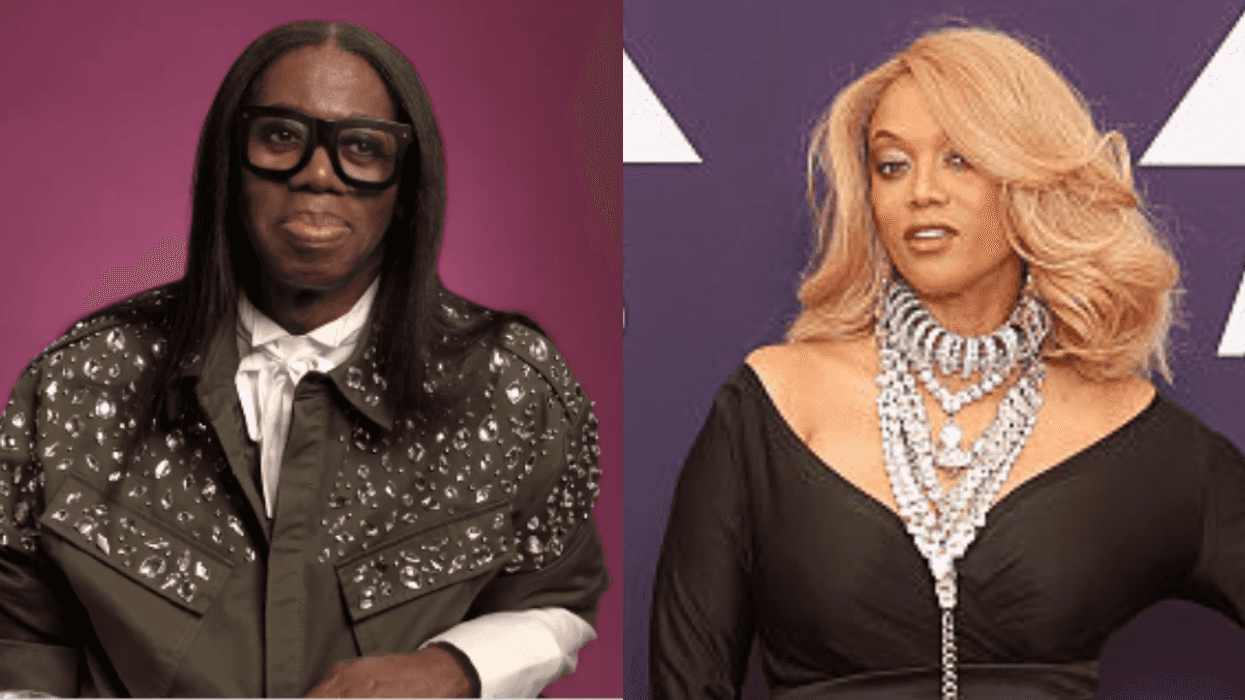

In the ongoing saga between a 54-year-old conservative radio and Fox News host once dubbed "Right-Wing Radio’s High Priestess of Hate" and an outspoken 17-year-old survivor of the Parkland school mass shooting, a new player has entered the fray: Russian Twitter bots.

A Twitter bot is software that sends out automated posts on Twitter. Most bots work simply, programmed to send out tweets periodically or respond to instances of specific phrases or hashtags.

Over the weekend, Russian-linked Twitter accounts rallied around Laura Ingraham to further foment discord online. The hashtag #IstandwithLaura jumped 2,800 percent in 48 hours. On Saturday night, it was the top trending hashtag among Russian campaigners, according to Hamilton 68, a website that tracks Russian propaganda on Twitter.

Among the top six Twitter usernames tweeted by accounts identified with Russian propaganda were @ingrahamangle, @davidhogg111 and @foxnews. The top two-word phrases used were "Laura Ingraham" and "David Hogg". The website botcheck.me, which tracks 1,500 "political propaganda bots," compiled the data.

“This is pretty typical for them, to hop on breaking news like this,” said Jonathon Morgan, CEO of New Knowledge, which tracks online disinformation campaigns.

“The bots focus on anything that is divisive for Americans. Almost systematically.”

Sowing discord, especially in the USA, appears to be a key strategy for the Russian Twitter bots. By seizing on any hot-button issue online, they attempt to bolster the impact of the side with less organic support from real humans living in the United States.

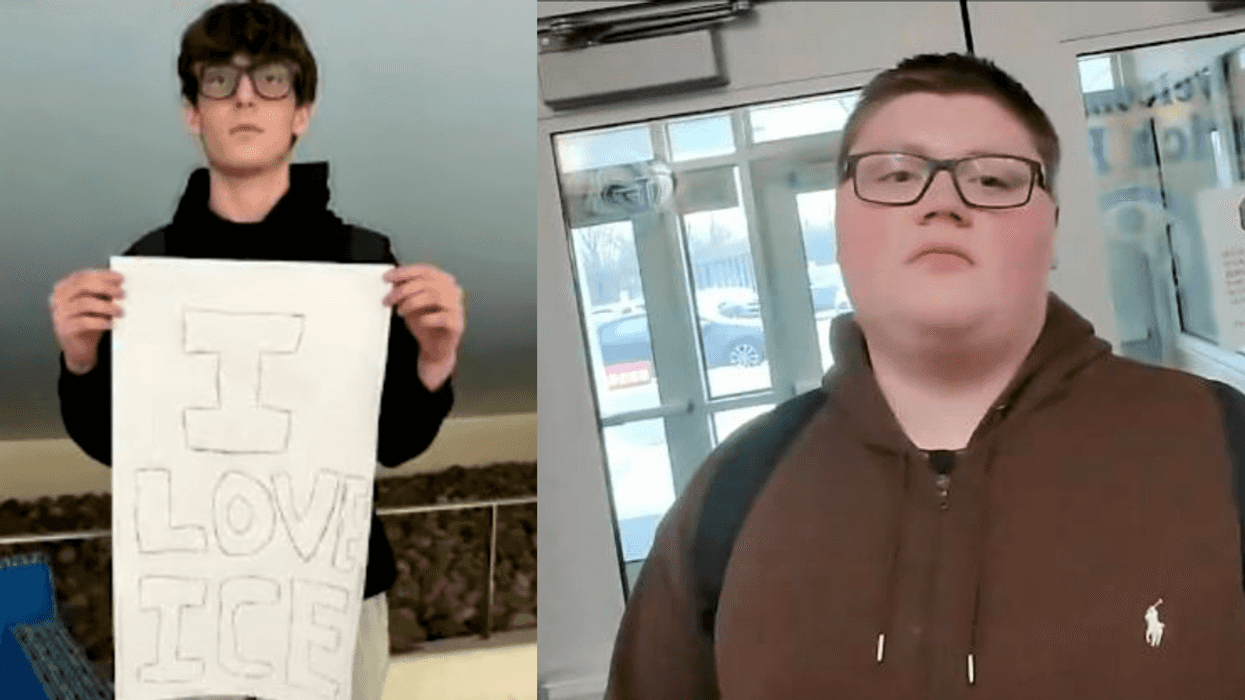

Researchers studying Russian propaganda bots found that, in the days after the tragedy that killed 17 people at Marjory Stoneman Douglas High School, #Parkland, #guncontrolnow, #Florida and #guncontrol were among the top hashtags used by Russia-linked accounts.

Since the Parkland school shooting, Russian bots have flooded Twitter with conspiracy theories and false claims about the murders and the survivors, aiming to rally actual humans to the side of the gun control debate that had less real support online.

As the boycott of Ingraham's sponsors grew under the hashtag #BoycottIngrahamAdverts, it drew the attention of the Russian propaganda machine. As of Saturday, 16 advertisers had taken steps to drop Ingraham's programming from their advertising lineup.

That momentum prompted Russia's Twitter bots to be programmed to tweet support for the opposing view. In this case, Russian interests are not backing one cause over another. Instead, they are balancing the two sides to prolong conflicts that divide United States citizens.

Bots distort issues. Russia employs them to make a phrase or hashtag trend, to amplify or attack a message or article, or to harass other users.

So how can you tell when you are dealing with a bot attack or distortion?

The Atlantic Council's Digital Forensic Research Lab (DFRL) offers tips for spotting a bot:

- Frequency: Bots are prolific posters; the more frequently an account posts, the more caution is warranted. DFRL classifies 72 posts per day as suspicious and more than 144 per day as highly suspicious.

- Anonymity: Bots often lack personal information. Accounts have generic profile pictures and political slogans as "bios".

- Amplification: A bot's timeline consists mostly of re-tweets and verbatim quotes, with few originally worded posts.

- Common content: Identify networks of bots by multiple profiles tweeting the same content almost simultaneously.
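For readers who want to apply these tips to their own data, the checks can be roughly sketched in Python. This is only an illustration under stated assumptions, not DFRL's tooling: the `Post` record, the function names, the reading of "72 posts per day" as "72 or more", the 60-second window and the three-account minimum are all hypothetical choices made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Post:
    """Hypothetical record of one post; not a real Twitter API object."""
    account: str
    text: str
    posted_at: datetime
    is_retweet: bool = False


def frequency_flag(posts_per_day: float) -> str:
    """Apply the thresholds quoted above (72 and 144 posts per day).

    Assumption: 72 or more counts as suspicious, strictly more than 144
    as highly suspicious.
    """
    if posts_per_day > 144:
        return "highly suspicious"
    if posts_per_day >= 72:
        return "suspicious"
    return "unremarkable"


def amplification_ratio(posts: list[Post]) -> float:
    """Share of an account's posts that are retweets (0.0 for no posts)."""
    if not posts:
        return 0.0
    return sum(p.is_retweet for p in posts) / len(posts)


def shared_content(
    posts: list[Post],
    window: timedelta = timedelta(seconds=60),  # assumed meaning of "almost simultaneously"
    min_accounts: int = 3,                      # assumed minimum network size
) -> dict[str, set[str]]:
    """Find identical texts posted by several accounts within a short window."""
    by_text: dict[str, list[Post]] = defaultdict(list)
    for post in posts:
        by_text[post.text.strip().lower()].append(post)

    clusters: dict[str, set[str]] = {}
    for text, group in by_text.items():
        times = [p.posted_at for p in group]
        accounts = {p.account for p in group}
        # Simplification: every copy of the text must fall inside one window.
        if len(accounts) >= min_accounts and max(times) - min(times) <= window:
            clusters[text] = accounts
    return clusters
```

For instance, `frequency_flag(100)` returns `"suspicious"`. None of these signals is proof on its own; anonymity in particular is a judgment about profile details that this sketch does not attempt to automate.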

The Digital Forensic Research Lab's full list of tips is available online.

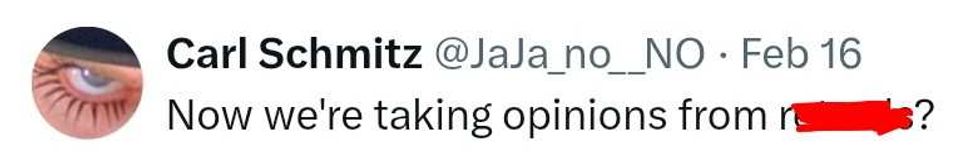

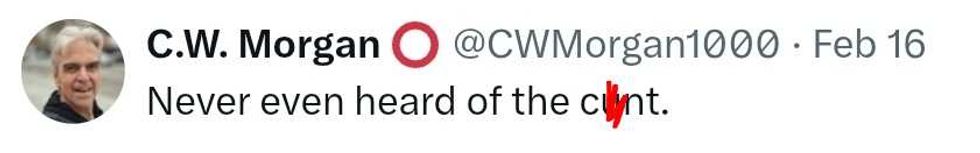