Autonomous AI and weapons may have a future. Just not together.

As of August 2018, more than 2,400 high-impact players in science and technology, from SpaceX CEO Elon Musk to the late astrophysicist Stephen Hawking, have signed a Lethal Autonomous Weapons Pledge declaring their intention to halt an autonomous AI arms race before it begins. The historic motion urges governments to consider regulations that preemptively ban Lethal Autonomous Weapon Systems (LAWS) and that deter and monitor militarized nations that might amass them. LAWS are a growing class of automated weaponry, including unmanned drones, fighter jets, and any lethal AI endowed with decisive power over human life.

Authored by the Future of Life Institute (FLI), the pledge warns against the cataclysmic potential of developing LAWS that autonomously identify and exterminate a human target. Independently of the pledge, 26 countries in the United Nations have explicitly endorsed a ban on LAWS, including Argentina, China, Iraq, Mexico, and Pakistan.

Last year at the International Joint Conference on Artificial Intelligence (IJCAI), one of the world’s leading AI conferences, FLI’s AI and robotics researchers released an open letter calling for an initial ban on LAWS to avert a “third revolution in warfare.” The letter urged the United Nations to prevent autonomous AI technologies from being used as “sanctioned instruments of violent oppression.”

However, as AI becomes increasingly integrated into global defense initiatives, spending on national machine-learning programs has only increased. Under the $717 billion National Defense Authorization Act (NDAA), passed by the US Congress in the last few weeks and signed by President Trump, the Pentagon’s Project Maven, a program designed to categorize objects in drone imagery, received a 580% funding increase.

Described as an outreach organization protecting humanity against destructive technologies, FLI pursues a utopian mission to “catalyze and support research initiatives for safeguarding life, developing optimistic visions of the future...including positive ways for humanity to steer its own course considering new technologies and challenges.” FLI’s founders commit to “neither participate in nor support the development, manufacture, trade, or use of lethal autonomous weapons.” In a world without LAWS, autonomous AI could better address societal challenges such as resource management, energy renewal, environmental conservation, and the evolving difficulties posed by the ongoing global financial crisis.

One of the pledge’s first signatories, Stuart Russell, a leading AI scientist at the University of California, Berkeley, believes that manufacturing LAWS would devastate basic human security and freedom: “It is not science fiction. In fact, it is easier to achieve than self-driving cars, which require far higher standards of performance. Our AI systems must do what we want them to do…keeping artificial intelligence beneficial.”

Elon Musk, alongside Facebook’s Mark Zuckerberg, is one of many entrepreneurs funding “beneficial” AI through joint machine-learning ventures like Vicarious FPC, a company building a neural network that replicates the brain’s neocortex, the region that controls vision, body movement, and language functions. Google’s DeepMind, with over $400 million invested to date, currently leads the private sector in AI development aimed at making a positive impact.

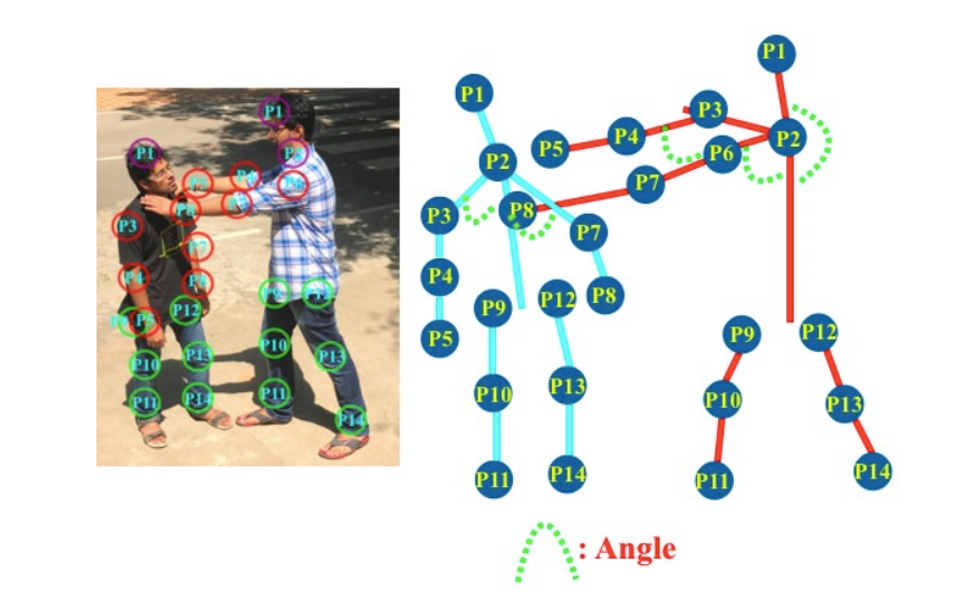

However, while not intended for LAWS, the neuro-inspired learning algorithms used in Facebook’s current face-recognition software, Apple’s and Samsung’s smartphone personal assistants, and Google’s self-driving cars lend themselves perfectly to lethal AI applications. In India and the UK, researchers have similarly flown drones employing image recognition algorithms that scan video footage to permissively fire on “violent” targets.

Non-governmental coalitions, such as Human Rights Watch and the Campaign to Stop Killer Robots, describe LAWS as new “weapons of mass destruction,” reflecting fears that warring nations and rogue terrorists alike would automate genocide at a horrific pace. The decision to take a human life should never be delegated solely to a machine intelligence. As autonomous AI reframes modern-day perspectives on military, industrial, and economic landscapes, policymakers must contemplate the long-term moral and geopolitical consequences of engaging human targets without constant oversight.

FLI’s pledge concludes that building and deploying LAWS would invite catastrophe on an unprecedented scale: “Once developed, lethal autonomous weapons will permit armed conflict to be fought at a scale greater than ever, and at timescales faster than humans can comprehend. These can be weapons of terror, weapons that despots and terrorists use against innocent populations, and weapons hacked to behave in undesirable ways.”

The monumental steps taken toward proactively regulating LAWS are not only altruistic but also herald a universal and existential responsibility to avoid “summoning a demon” before it’s too late. Leading experts are unanimous: once opened, this Pandora’s box can’t be closed.

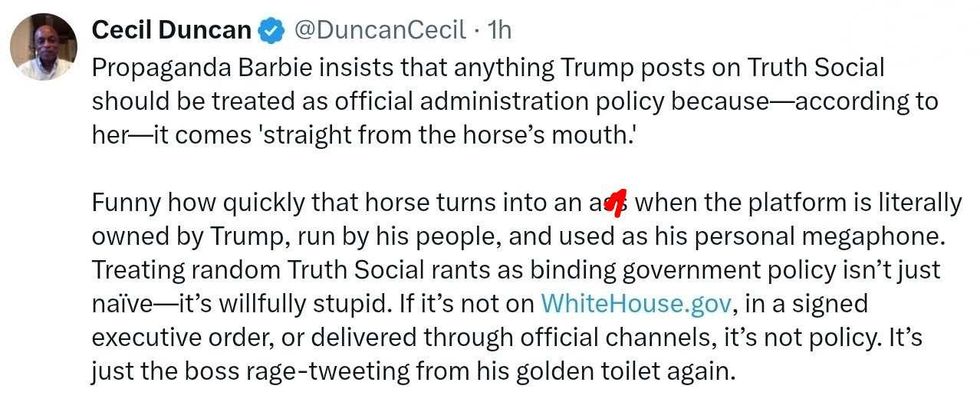

@DuncanCecil/X

@DuncanCecil/X @@realDonaldTrump/Truth Social

@@realDonaldTrump/Truth Social @89toothdoc/X

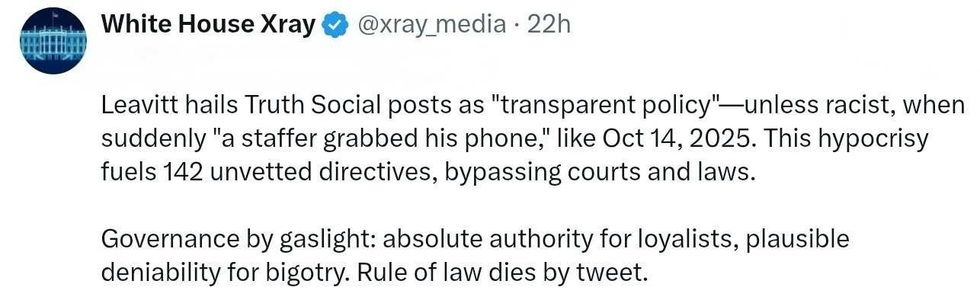

@89toothdoc/X @xray_media/X

@xray_media/X @CHRISTI12512382/X

@CHRISTI12512382/X

@sza/Instagram

@sza/Instagram @laylanelli/Instagram

@laylanelli/Instagram @itssharisma/Instagram

@itssharisma/Instagram @k8ydid99/Instagram

@k8ydid99/Instagram @8thhousepath/Instagram

@8thhousepath/Instagram @solflwers/Instagram

@solflwers/Instagram @msrosemarienyc/Instagram

@msrosemarienyc/Instagram @afropuff1/Instagram

@afropuff1/Instagram @jamelahjaye/Instagram

@jamelahjaye/Instagram @razmatazmazzz/Instagram

@razmatazmazzz/Instagram @sinead_catherine_/Instagram

@sinead_catherine_/Instagram @popscxii/Instagram

@popscxii/Instagram